melonDS RSS

https://melonds.kuribo64.net/rss.php

The latest news on melonDS.

Feed Info

[2026-02-04T14:10:54.734Z] Updated feed with 10 items

[https://melonds.kuribo64.net/rss.php] groups = Blogs

blackmagic3 merged! -- by Arisotura

After much testing and bugfixing, the blackmagic3 branch has finally been merged. This means that the new OpenGL renderer is now available in the nightly builds.

Our plan is to let it cool down and potentially fix more issues before actually releasing melonDS 1.2.

So we encourage you to test out this new renderer and report any issues you might observe with it.

There are issues we're already aware of, and for which I'm working on a fix. For example, FMVs that rely on VRAM streaming, or Golden Sun. There may be tearing and/or poor performance for now. I have ideas to help alleviate this, but it'll take some work.

There may also be issues that are inherent to the classic OpenGL 3D renderer, so if you think you've encountered a bug, please verify against melonDS 1.1.

If you run into any problem, we encourage you to open an issue on GitHub, or post on the forums. The comment section on this blog isn't a great place for reporting bugs.

Either way, have fun!

Golden Sun: Dark Destruction -- by Arisotura

It's no secret that every game console to be emulated will have its set of particularly difficult games. Much like Dolphin with the Disney Trio of Destruction, we also have our own gems. Be it games that push the hardware to its limits in smart and unique ways, or that just like to do things in atypical ways, or just games that are so poorly coded that they run by sheer luck.

Most notably: Golden Sun: Dark Dawn.

This game is infamous for giving DS emulators a lot of trouble. It does things such as running its own threading system (which maxes out the ARM9), abusing surround mode to mix in sound effects, and so on.

I bring this up because it's been reported that my new OpenGL renderer struggles with this game. Most notably, it runs really slow and the screens flicker. Since the blackmagic3 branch is mostly finished, and I'm letting it cool down before merging it, I thought, hey, why not write a post about this? It's likely that I will try to fix Golden Sun at least enough to make it playable, but that will be after blackmagic3 is merged.

So, what does Golden Sun do that is so hard?

It renders 3D graphics to both screens. Except it does so in an atypical way.

If you run Golden Sun in melonDS 1.1, you find out that upscaling just "doesn't work". The screens are always low-res. Interestingly, in this scenario, DeSmuME suffers from melonDS syndrome: the screens constantly flicker between the upscaled graphics and their low-res versions. NO$GBA also absolutely hates what this game is doing.

So what is going on there?

Normally, when you do dual-screen 3D on the DS, you need to reserve VRAM banks C and D for display capture. You need those banks specifically because they're the only banks that can be mapped to the sub 2D engine and that are big enough to hold a 256x192 direct color bitmap. You need to alternate them because you cannot use a VRAM bank for BG layers or sprites and capture to it at the same time, due to the way VRAM mapping works.

However, Golden Sun is using VRAM banks A, B and C for its textures. This means the standard dual-screen 3D setup won't work. So they worked around it in a pretty clever way.

The game renders a 3D scene for, say, the top screen. At the same time, this scene gets captured to VRAM bank D. The video output for the bottom screen is sourced not from the main 2D engine, but from VRAM - from bank D, too. That's the trick: when using VRAM display, it's possible to use the same VRAM bank for rendering and capturing, because both use the same VRAM mapping mode. In this situation, display capture overwrites the VRAM contents after they're sent to the screen.
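To make the ordering concrete, here is a minimal Python sketch of one frame where the same bank is both the display source and the capture target. The names (`run_frame`, `bank_d`) are made up for illustration and this is not melonDS code. It only models the read-before-overwrite order described above, one scanline at a time.

```python
def run_frame(bank_d, rendered_3d):
    """Display one frame from bank_d while capturing rendered_3d into it.

    bank_d and rendered_3d are lists with one entry per scanline.
    Returns what the VRAM-display screen showed during this frame.
    """
    displayed = []
    for y in range(len(bank_d)):
        displayed.append(bank_d[y])   # VRAM display sends the old line out first
        bank_d[y] = rendered_3d[y]    # display capture then overwrites that line
    return displayed


bank_d = ["frame0"] * 192
shown_1 = run_frame(bank_d, ["frame1"] * 192)
shown_2 = run_frame(bank_d, ["frame2"] * 192)
```

In this model the screen fed from VRAM always shows the frame captured one frame earlier, while the bank ends up holding the newest 3D frame.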

At this point, the bottom screen rendered a previously captured frame, and a new 3D frame was rendered to VRAM, but not displayed anywhere. What about the top screen then?

The sub 2D engine is configured to go to the top screen. It has one BG layer enabled: BG2, a 256x256 direct color bitmap. VRAM bank H is mapped as BG memory for the sub 2D engine, so that's where the bitmap is sourced from.

Then, on each frame, the top and bottom screens are swapped, and the process is repeated.

But... wait?

VRAM bank H is only 32K. You need at least 96K for a 256x192 bitmap.

That's where this gets fun! Every time a display capture is completed, the game transfers it to one of a set of two buffers in main RAM. Meanwhile, the other buffer is streamed to VRAM bank H, using HDMA. Actually, only a 512-byte part of bank H is used - the affine parameters for BG2 are set in such a way that the first scanline will be repeated throughout the entire screen, and that first scanline is what gets updated by the HDMA.
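The numbers quoted here can be checked with a few lines of Python. This is only arithmetic, assuming 16 bits per pixel for a direct color bitmap. The helper name `bitmap_bytes` is made up for the example.

```python
BYTES_PER_PIXEL = 2  # direct color bitmaps use 16 bits per pixel


def bitmap_bytes(width, height):
    """Size in bytes of a direct color bitmap."""
    return width * height * BYTES_PER_PIXEL


full_screen_kib = bitmap_bytes(256, 192) // 1024   # one full 256x192 frame
scanline_bytes = bitmap_bytes(256, 1)              # one 256-pixel scanline
bank_h_kib = 32                                    # size of VRAM bank H
fits_in_bank_h = full_screen_kib <= bank_h_kib
```

A full frame comes out at 96K, which doesn't fit in bank H, while a single scanline is exactly the 512 bytes the game streams.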

All in all, pretty smart.

The part where it sucks is that it absolutely kills my OpenGL renderer.

That's because the renderer has support for splitting rendering if certain things are changed midframe: certain settings, palette memory, VRAM, OAM. In such a situation, one screen area gets rendered with the old settings, and the new ones are applied for the next area. Much like the blargSNES hardware renderer.

The renderer also pre-renders BG layers, so that they may be directly used by the compositor shader, and static BG layers don't have to be re-decoded every time.

But in a situation like the aforementioned one, where content is streamed to VRAM per-scanline, this causes rendering to be split into 192 sections, each with their own VRAM upload, BG pre-rendering and compositing. This is disastrous for performance.
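Here is a rough Python sketch of why per-scanline streaming is the worst case for this kind of splitting. It is not the renderer's actual logic. `split_sections` is a hypothetical helper that starts a new section at every scanline where something changed.

```python
def split_sections(dirty_lines, height=192):
    """Split a frame into (start, end) sections.

    A new section starts at every scanline in dirty_lines, i.e. every line
    where settings or VRAM changed. end is exclusive.
    """
    starts = sorted({0} | {y for y in dirty_lines if 0 < y < height})
    return list(zip(starts, starts[1:] + [height]))


static_frame = split_sections([])            # nothing changes mid-frame
one_change = split_sections([96])            # e.g. a single mid-frame write
golden_sun = split_sections(range(192))      # something changes on every line
```

A static frame is one section, a single mid-frame change gives two, and a change on every scanline gives 192 one-line sections, each paying the full upload and compositing cost.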

This is where something like the old renderer approach would shine, actually.

I have some ideas in mind to alleviate this, but it's not the only problem there.

The other problem is that it just won't be possible to make upscaling work in Golden Sun. Not without some sort of game-specific hack.

I could fix the flickering and bad performance, but at best, it would function like it does in DeSmuME.

The main issue here is that display captures are routed through main RAM. While I can relatively easily track which VRAM banks are used for display captures, trying to keep track of this through other memory regions would add too much complexity. Not to mention the whole HDMA bitmap streaming going on there.

At this rate it's just easier to add a specific hack, but I'm not a fan of this at all. We'll have to see what we do there.

Still, I appreciate the creativity the Golden Sun developers have shown there.

Becoming a master mosaicist -- by Arisotura

So basically, mosaic is the last "big" feature that needs to be added to the OpenGL renderer...

Ah, mosaic.

I wrote about it here, back then. But basically, while BG mosaic is mostly well-behaved, sprite mosaic will happily produce a fair amount of oddities.

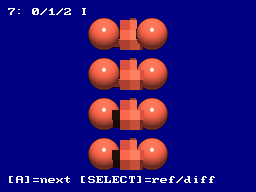

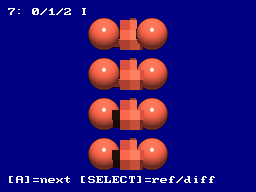

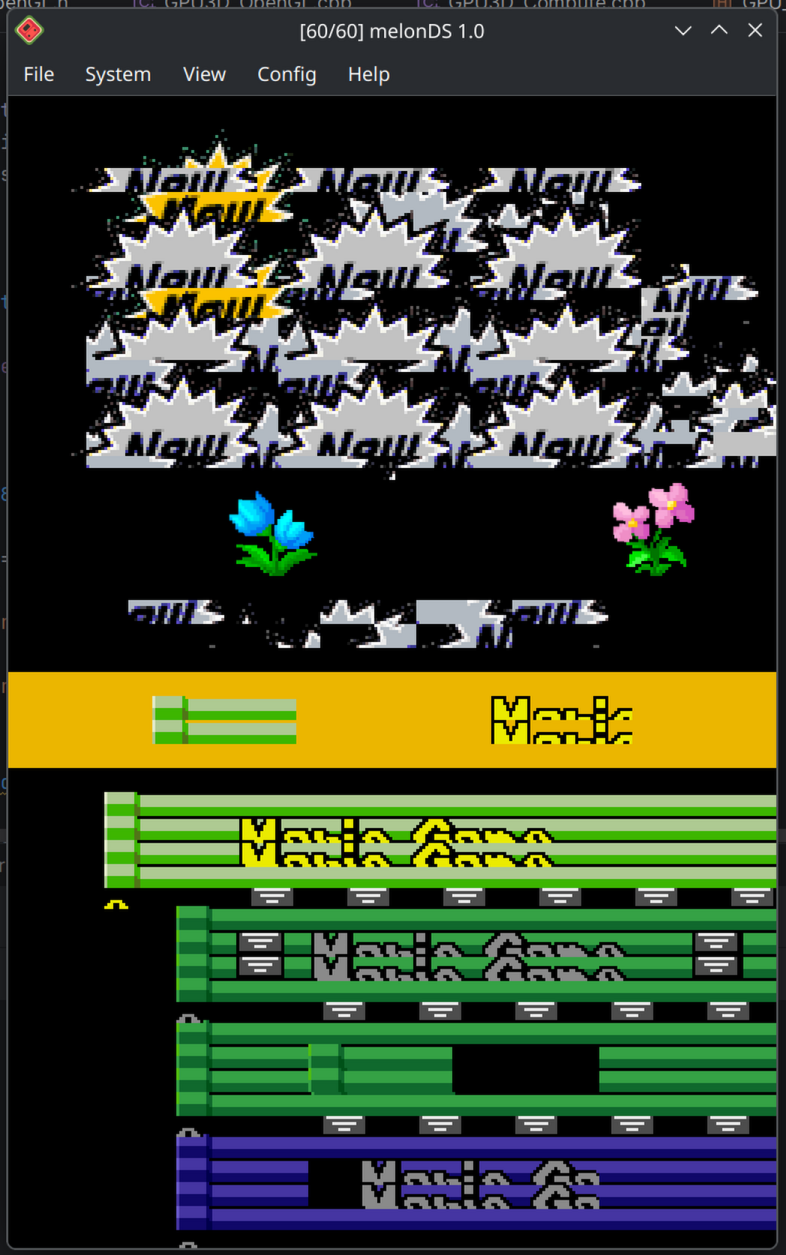

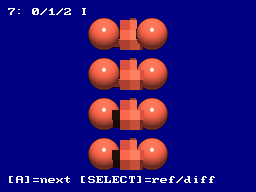

Actual example, from hardware:

I had already noticed this back then, as I was trying to perfect sprite mosaic, but didn't quite get there. I ended up putting it on the backburner.

Fast forward to, well, today. As I said, I need to add mosaic support to the OpenGL renderer, but before doing so, I want to be sure to get the implementation right in the software renderer.

So I decided to tackle this once and for all. I developed a basic framework for graphical test ROMs, which can set up a given scene, capture it to VRAM, and compare that against a reference capture acquired from hardware. While the top screen shows the scene itself, the bottom screen shows either the reference capture, or a simple black/white compare where any different pixels immediately stand out.

I built a few test ROMs out of this. One tests various cases, ranging from one sprite to several, and even things like changing REG_MOSAIC midframe. One tests sprite priority orders specifically. One is a fuzzing test, which just places a bunch of sprites with random coordinates and priority orders.

I intend to release those test ROMs on the board, too. They've been pretty helpful in making sure I get the details of sprite mosaic right. Talking with GBA emu developers was also helpful.

Like a lot of things, the logic for sprite mosaic isn't that difficult once you figure out what is really happening.

First thing, the 2D engine renders sprites from first to last. This kind of sucks from a software perspective, because sprite 0 has the highest priority, and sprite 127 the lowest. For this reason, melonDS processed sprites backwards, so that they would naturally have the correct priority order without having to worry about what's already in the render buffer. But that isn't accurate to hardware. This part will be important whenever we add support for sprite limits.

What is worth considering is that when rendering sprites, the 2D engine also keeps track of transparent sprite pixels. If a given sprite pixel is transparent, the mosaic flag and BG-relative priority value for this pixel are still updated. However, while opaque sprite pixels don't get rendered if a higher-priority opaque pixel already exists in the buffer, transparent pixels end up modifying flags even if the buffer already contains a pixel, as long as that pixel isn't opaque. This quirk is responsible for a lot of the weirdness sprite mosaic may exhibit.

The mosaic effect itself is done in two different ways.

Vertical mosaic is done by adjusting the source Y coordinate for sprites. Instead of using the current VCount as a Y coordinate, an internal register is used, and that register gets updated every time the mosaic counter reaches the value set in REG_MOSAIC. All in all, fairly simple.

Horizontal mosaic, however, is done after all the sprites are rendered. The sprite buffer is processed from left to right. For each pixel, if it meets any of a few rules, its value is copied to a latch register, otherwise it is replaced with the last value in the latch register. The rules are: if the horizontal mosaic counter reaches the REG_MOSAIC value, or if the current pixel's mosaic flag isn't set, or if the last latched pixel's mosaic flag wasn't set, or if the current pixel has a lower BG-relative priority value than the last latched pixel.

Of course, there may be more interactions we haven't thought of, but these rules took care of all the oddities I could observe in my test cases.
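The horizontal pass can be sketched in Python as follows. This is an illustration of the four rules, not the melonDS implementation. The names (`SpritePixel`, `apply_horizontal_mosaic`) are made up, transparent pixels are left out, and it assumes the counter starts a new block at x = 0 and that a REG_MOSAIC value of N means blocks of N+1 pixels.

```python
from dataclasses import dataclass


@dataclass
class SpritePixel:
    color: int
    mosaic: bool    # mosaic flag of the sprite that produced this pixel
    priority: int   # BG-relative priority value, lower means in front


def apply_horizontal_mosaic(line, mosaic_size):
    """Process one line of the sprite buffer from left to right."""
    out = []
    latch = None
    counter = 0
    for px in line:
        block_start = counter == 0   # the counter reached the target and reset
        if (latch is None or block_start
                or not px.mosaic             # current pixel has no mosaic flag
                or not latch.mosaic          # last latched pixel had no mosaic flag
                or px.priority < latch.priority):
            latch = px                       # copy the pixel to the latch
        out.append(latch)                    # otherwise the latch replaces it
        counter = 0 if counter == mosaic_size else counter + 1
    return out


def colors(line):
    return [px.color for px in line]


plain = [SpritePixel(c, True, 0) for c in (1, 2, 3, 4)]
mixed = [SpritePixel(1, True, 0), SpritePixel(2, False, 0),
         SpritePixel(3, True, 0), SpritePixel(4, True, 0)]
prio = [SpritePixel(1, True, 2), SpritePixel(2, True, 2),
        SpritePixel(3, True, 1), SpritePixel(4, True, 2)]
```

With a size of 1, `plain` turns into two 2-pixel blocks. In `mixed`, the non-mosaic pixel breaks the block and also forces the pixel after it to be latched. In `prio`, the higher-priority pixel in the middle takes over the latch for the rest of the block.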

Now, the fun part: implementing this shit into the OpenGL renderer is going to be fun. As I said before, while this algorithm makes sense if you're processing pixels from left to right, that's not how GPU shaders work. I have a few ideas in mind for this, but the code isn't going to be pretty.

But I'm not quite there yet. I still want to make test ROMs for BG mosaic, to ensure it's also working correctly, and also to fix a few remaining tidbits in the software renderer.

Regardless, it was fun doing this. It's always satisfying when you end up figuring out the logic, and all your tests come out just right.

blackmagic3: refactor finished! -- by Arisotura

The blackmagic3 branch, a perfect example of a branch that has gone way past its original scope. The original goal was to "simply" implement hi-res display capture, and here we are, refactoring the entire thing.

Speaking of refactor, it's mostly finished now. For a while, it made a mess of the codebase: everything had to be moved around, the code was left in a state where it wouldn't even compile, and it started to feel overwhelming. But now things are good again!

As I said in the previous post, the point of the refactor was to introduce a global renderer object that keeps the 2D and 3D renderers together. The benefits are twofold.

First, this is less prone to errors. Before, the frontend had to separately create 2D and 3D renderers for the melonDS core, with the possibility of having mismatched renderers. Having a unique renderer object avoids this entirely, and is overall easier to deal with for the frontend.

Second, this is more accurate to hardware. Namely, the code structure more closely adheres to the way the DS hardware is structured. This makes it easier to maintain and expand, and more accurate in a natural kind of way. For example, implementing the POWCNT1 enable bits is easier. The previous post explains this more in detail, so I won't go over it again.

I've also been making changes to the way 2D video registers are updated, and to the state machine that handles video timing, with the same aim of more closely reflecting the actual hardware operation. This will most likely not result in tangible improvements for the casual gamer, but if we can get more accurate with no performance penalty, that's a win. Reason is simple: in emulation, the more closely you follow the original hardware's logic and operation, the less likely you are to run into issues. But accuracy is also a tradeoff. I could write a more detailed post about this.

If there are any tangible improvements, they will be about the mosaic feature, especially sprite mosaic, which melonDS still doesn't handle correctly. Not much of a big deal, mosaic seems seldom used in DS games...

Since we're talking about accuracy, it brings me to this issue: 1-byte writes to DISPSTAT don't work.

A simple case: 8-bit writes to DISPSTAT are just not handled. I addressed it in blackmagic3, as I was reworking that part of the code. But it's making me think about better ways to handle this.

As of now, melonDS has I/O access handlers for all possible access types: 8-bit, 16-bit, 32-bit, reads and writes. It was done this way so that each case could be implemented correctly. The issue, however, is that I've only implemented the cases which I've run into during my testing, since my policy is to avoid implementing things without proper testing.

Which leads to situations like this. It's not the first time this has happened, either.

So this has me thinking.

The way memory reads work on hardware depends on the bus width. On the DS, some memory regions use a 32-bit bus, others use a 16-bit bus.

So if you do a 16-bit read from a 32-bit bus region, for example, it is internally implemented as a full 32-bit read, and the unneeded part of the result is masked out.

Memory writes work in a similar manner. What comes to my mind is the FPGA part of my WiiU gamepad project, and in particular how the SDRAM there works, but the SDRAM found in the DS works in a pretty similar way.

This type of SDRAM has a 16-bit data bus, so any access will operate on a 16-bit word. Burst modes allow accessing consecutive addresses, but it's still 16 bits at a time. However, there are also two masking inputs: those can be used to mask out the lower or upper byte of a 16-bit word. So during a read, the part of the data bus which is masked out is unaffected, and during a write, it is ignored, meaning the corresponding bits in memory are untouched. This is how 8-bit accesses are implemented.
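As a small illustration, here is how narrower accesses can be expressed on top of full-width ones in Python. The function names are made up, and the byte lane selection (even address on the lower byte, odd address on the upper byte) is an assumption based on the DS being little-endian.

```python
def read16_from_32bit_bus(word32, addr):
    """16-bit read on a 32-bit bus: read the full word, keep the needed half."""
    shift = (addr & 2) * 8
    return (word32 >> shift) & 0xFFFF


def masked_write16(old_word, data, write_low, write_high):
    """16-bit write with two byte masks: masked-out bytes keep their old value."""
    mask = (0x00FF if write_low else 0) | (0xFF00 if write_high else 0)
    return (old_word & ~mask & 0xFFFF) | (data & mask)


def write8(old_word, addr, value):
    """8-bit write implemented as a 16-bit write with one byte masked out."""
    value &= 0xFF
    if addr & 1:
        return masked_write16(old_word, value << 8, False, True)
    return masked_write16(old_word, value, True, False)


low_half = read16_from_32bit_bus(0xAABBCCDD, 0)
high_half = read16_from_32bit_bus(0xAABBCCDD, 2)
after_low_byte = write8(0x1234, 0, 0xAB)
after_high_byte = write8(0x1234, 1, 0xAB)
```

Plain memory behaves this simply. Registers with side effects are where each lane may need its own handling.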

On the DS, I/O regions seem to operate in a similar fashion: for example, writes smaller than a register's bit width will only update part of that register. However, I/O registers are not just memory: there are several of them which can trigger actions when read or written to. There are also registers (or individual register bits) which are read-only, or write-only. This is the part that needs extensive testing.

As a fun example, register 0x04000068: DISP_MMEM_FIFO.

This is the feature known as main memory display FIFO. The basic idea is that you have DMA feed a bitmap into the FIFO, and the video hardware renders it (or uses it as a source in display capture). Technically, the FIFO is implemented as a small circular buffer that holds 16 pixels.

So writing a 32-bit word to DISP_MMEM_FIFO stores two pixels in the FIFO, and advances the write pointer by two. All fine and dandy.

However, it's a little more complicated. Testing has shown that DISP_MMEM_FIFO is actually split into different parts, and reacts differently depending on which parts are accessed.

If you write to it in 16-bit units, for example, writing to the lower halfword stores one pixel in the FIFO, but doesn't advance the write pointer - so subsequent writes to the same address will overwrite the same pixel. Writing to the higher halfword (0x0400006A) stores one pixel at the next write position (write pointer plus one), and advances the write pointer by two.

Things get even weirder if you write to this register in 8-bit units. Writing to the first byte behaves like a 16-bit write to the lower halfword, but the input value is duplicated across the 16-bit range (so writing 0x23 will store a value of 0x2323). Writes to the second byte are ignored. Writes to the third byte behave like a 16-bit write to the upper halfword, with the same duplicating behavior, but the write pointer doesn't get incremented. Writing to the fourth byte increments the write pointer by two, but the input value is ignored.

You can see how this register was designed to be accessed in either 32-bit units, or sequential 16-bit units, but not really 8-bit units - those get weird.
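The observed behavior can be written down as a small Python model. This is a sketch of the test results described above, not melonDS code. The class and method names are made up, and it assumes a 32-bit write stores the lower halfword first, which matches the 16-bit behavior.

```python
class MainMemDisplayFifo:
    SIZE = 16  # circular buffer of 16 pixels

    def __init__(self):
        self.pixels = [0] * self.SIZE
        self.write_pos = 0

    def _store(self, offset, value):
        self.pixels[(self.write_pos + offset) % self.SIZE] = value & 0xFFFF

    def _advance(self):
        self.write_pos = (self.write_pos + 2) % self.SIZE

    def write32(self, value):
        self._store(0, value)          # lower halfword: first pixel
        self._store(1, value >> 16)    # upper halfword: second pixel
        self._advance()

    def write16(self, offset, value):
        if offset == 0:
            self._store(0, value)      # no pointer advance
        elif offset == 2:
            self._store(1, value)
            self._advance()
        else:
            raise ValueError("offset must be 0 or 2")

    def write8(self, offset, value):
        doubled = (value & 0xFF) * 0x0101   # 0x23 becomes 0x2323
        if offset == 0:
            self._store(0, doubled)
        elif offset == 1:
            pass                            # ignored
        elif offset == 2:
            self._store(1, doubled)         # stored, but no pointer advance
        elif offset == 3:
            self._advance()                 # value ignored
        else:
            raise ValueError("offset must be 0..3")


fifo = MainMemDisplayFifo()
fifo.write8(0, 0x23)
fifo.write8(2, 0x45)
fifo.write8(3, 0x00)
```

After those three byte writes, the first two slots hold 0x2323 and 0x4545 and the write pointer has moved by two, which is about as close to a 32-bit write as 8-bit accesses get.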

Actually, this kind of stuff looks weird from a software perspective, but if you consider the way the hardware works, it makes sense.

So this is another area of melonDS that likely warrants some refactoring and intensive testing. Would be great to finally close all the gaps in I/O handling once and for all. But this won't be part of blackmagic3 - it's way beyond the scope.

As far as blackmagic3 is concerned, we're getting pretty close now. I still need to add mosaic support, the various bits like "forced blank", and do a bunch of cleanup, optimization, ...

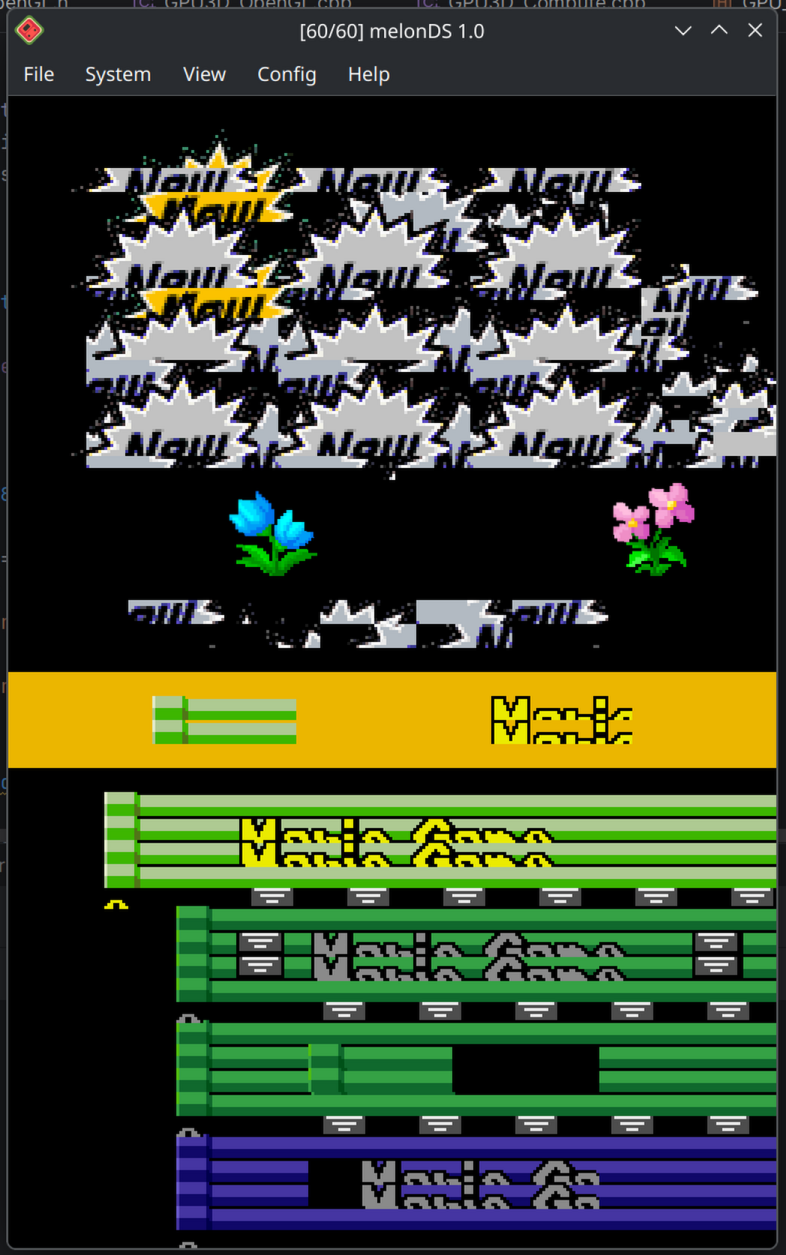

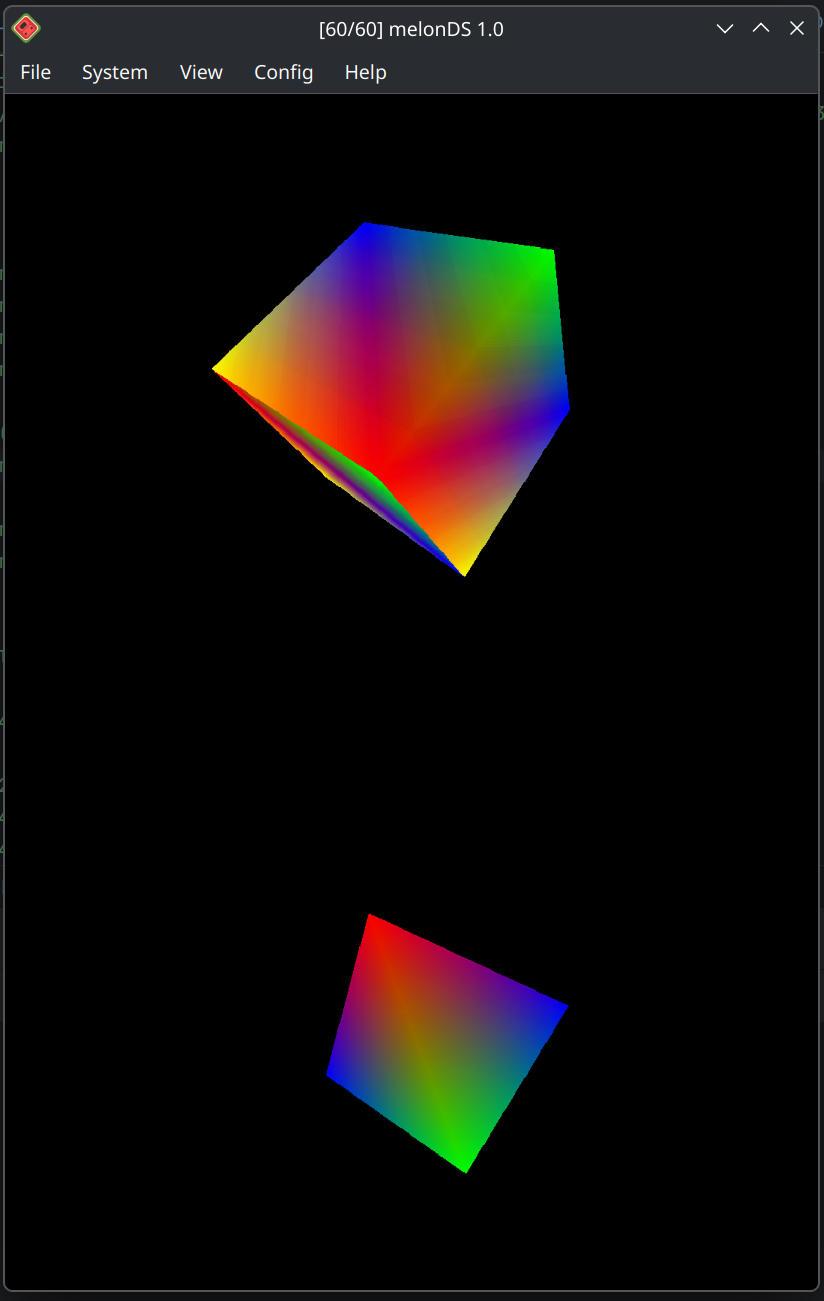

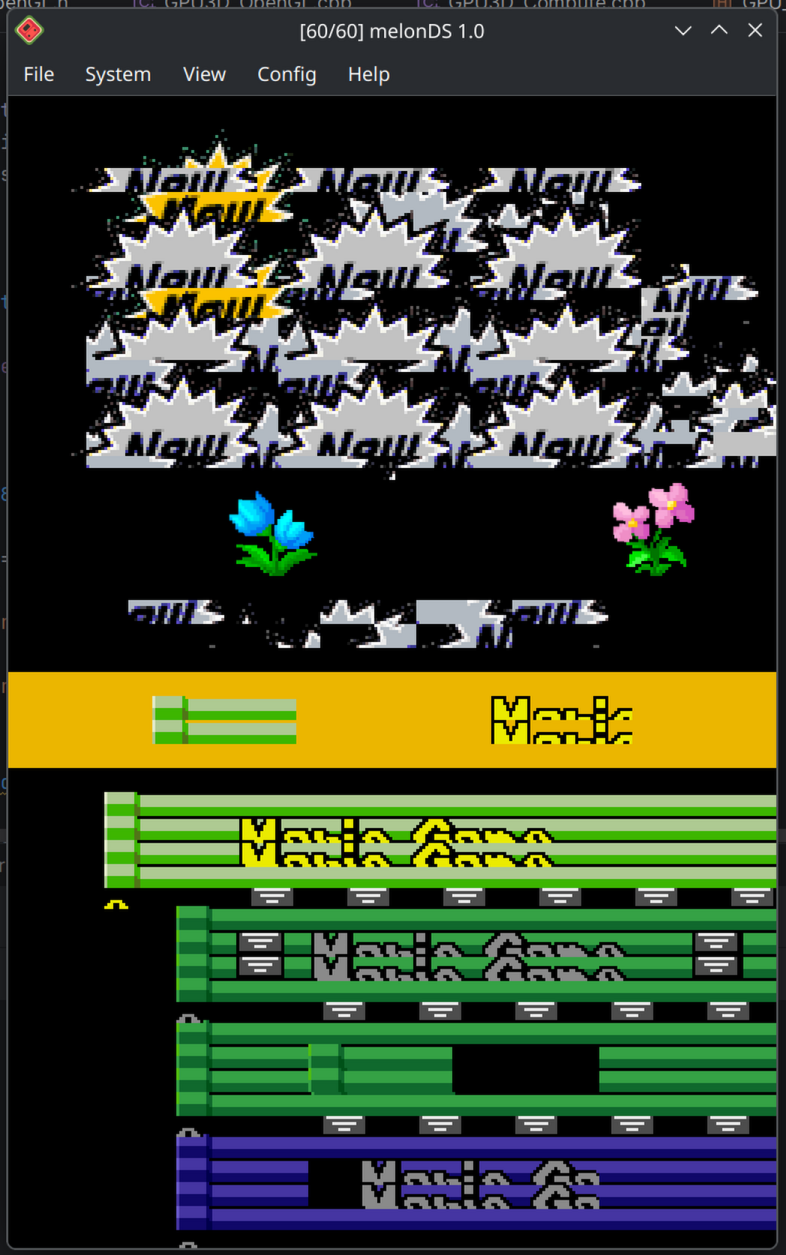

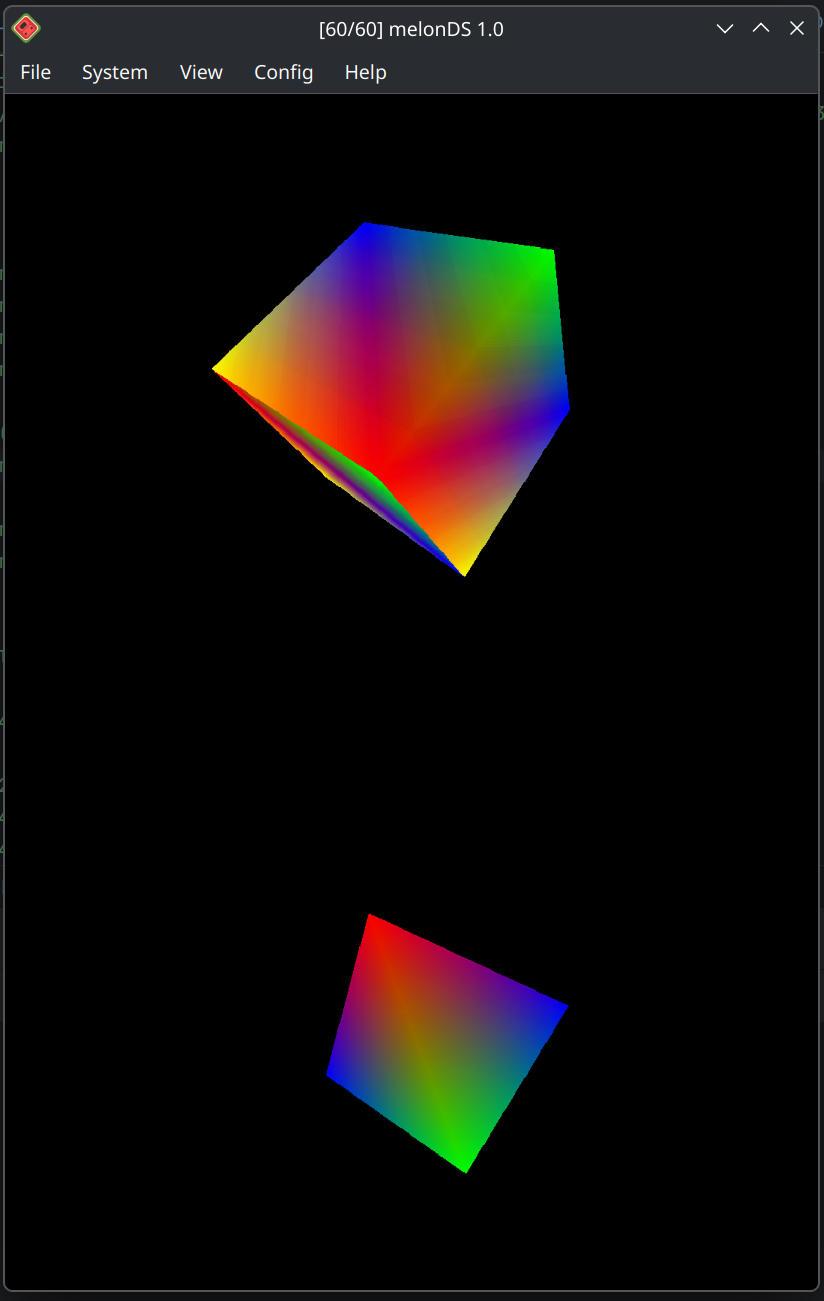

As a bonus, have an "epic fail" screenshot.

Between things like this, and melonDS crashing in RenderDoc due to uninitialized variables, it's been a bit rocky to get the renderer working again...

OpenGL renderer: status update, and "fun" stuff -- by Arisotura

First of all, we wish you a happy new year. May 2026 be filled with success.

Next, well... DS emulation, oh what fun.

It all started as I was contemplating what was left to be done with the OpenGL renderer. At this point, I've mostly managed to turn this pile of hacks into an actual, proper renderer. While there's still a lot of stuff to verify, clean up, optimize, the core structure is there. It may not support every edge case, but I believe it will do a pretty decent job.

The main remaining things to do are: add mosaic, add the "forced blank" and POWCNT1 2D engine enable flags, and restructure the 2D rendering code. As it turns out, those things are somewhat interconnected.

Mosaic was discussed here. Basically, it applies a pixelation effect to 2D graphics. BG mosaic shouldn't be difficult to add to the OpenGL renderer, but sprite mosaic is going to be tricky, because the way it works makes sense if you're processing pixels from left to right, but that's not how GPU shaders work. It will probably require some shitty code to get it right.

"Forced blank" is a control bit in DISPCNT. What it does is force the 2D engine to render a blank picture, which allows the CPU to access VRAM faster.

POWCNT1 made an appearance here. This register has enable bits for the two 2D engines, which have a bit of a similar effect to the aforementioned "forced blank" bit. However, it's not quite the same.

For example, I was testing all those features to make sure I understood them correctly before implementing them, and it highlighted some shortcomings in melonDS's software renderer.

Take affine BG layers. The way they work is that you have reference point registers (BGnX and BGnY) determining which point of the layer will be in the screen's top-left corner, then you have a 2x2 matrix (BGnPA to BGnPD) which transforms screen coordinates to layer coordinates. This is how arbitrary rotation and scaling is created. It is similar to mode 7 on the SNES.

Reference point registers have a peculiarity, though. They are stored in internal registers which are updated at every scanline, and reloaded at the end of the VBlank period (i.e. before a new frame). When you write to one of the reference point registers, the value goes in both the internal register and the reload register. This means that if you write to those registers during active display, the values you write set the origin point for the next scanline, not for the first scanline. It's important to get this right, as games may modify the reference point registers with HDMA to do fun effects.

The part melonDS currently misses, is that those reference point registers are updated even if the DISPCNT "forced blank" bit is set. However, they don't get updated if the 2D engine is entirely disabled via POWCNT1.

This means that, say you were to toggle the 2D engine on and off during active display, affine layers would end up displaced, but this wouldn't occur with the "forced blank" bit. It's a bit of an extreme edge case, I don't know of any games that do that, but you never know what they might do...
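A minimal Python model of one reference point register might look like this. It is a sketch under assumptions, not melonDS code. The class name is made up, and the per-scanline update is assumed to add the matrix's PB term (for X) or PD term (for Y), as commonly documented for the GBA and DS. Only the scanline update is gated on the POWCNT1 enable here.

```python
class AffineReferencePoint:
    def __init__(self):
        self.reload = 0     # value restored before each new frame
        self.internal = 0   # value actually used to render the next scanline

    def write(self, value):
        # A CPU or HDMA write lands in both registers.
        self.reload = value
        self.internal = value

    def end_of_scanline(self, step, engine_enabled=True):
        # Runs even under "forced blank", but not when the 2D engine
        # is disabled through POWCNT1.
        if engine_enabled:
            self.internal += step

    def end_of_vblank(self):
        self.internal = self.reload


ref = AffineReferencePoint()
ref.write(0x1000)
for _ in range(3):
    ref.end_of_scanline(0x100)
after_three_lines = ref.internal

ref.write(0x5000)                 # mid-frame write sets the next line's origin
after_midframe_write = ref.internal

ref.end_of_scanline(0x100, engine_enabled=False)
after_disabled_line = ref.internal
```

Skipping the update on a disabled line is what would leave an affine layer displaced once the engine is turned back on.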

Mosaic also works in a similar way. Vertical mosaic is implemented with counters which are updated at every scanline, and reset at the end of the VBlank period. The counters simply alter the base Y position used to render BG layers or sprites.

There's also an amusing difference in how they work, which can show if you change the MOSAIC register during active display. For both BG and sprite mosaic, the counter gets reset when it reaches the target value in MOSAIC. However, for BG mosaic, that target value is copied to an internal register, while for sprite mosaic it is always checked directly against MOSAIC. This means that if the counter happens to be greater than the new target value, it will count all the way to 15 and wrap to 0, resulting in some pretty tall "pixels". BG mosaic doesn't exhibit this quirk due to the internal register.
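The difference between the two counters is easy to see in a small Python simulation. This is a sketch, not the actual implementation. The function names are made up, the counters are assumed to be 4 bits wide, and the BG target is assumed to be re-latched whenever the counter resets.

```python
def sprite_counter_values(targets):
    """targets[y] is the vertical size in MOSAIC as seen at scanline y."""
    counter = 0
    values = []
    for target in targets:
        values.append(counter)
        if counter == target:              # always compared against MOSAIC itself
            counter = 0
        else:
            counter = (counter + 1) & 0xF  # 4-bit counter, wraps after 15
    return values


def bg_counter_values(targets):
    counter = 0
    latched = targets[0]                   # internal copy of the target
    values = []
    for target in targets:
        values.append(counter)
        if counter == latched:
            counter = 0
            latched = target               # assumption: re-latched on reset
        else:
            counter = (counter + 1) & 0xF
    return values


# MOSAIC vertical size is 7, then changed to 2 at scanline 5,
# when both counters are already at 5.
targets = [7] * 5 + [2] * 20
sprite = sprite_counter_values(targets)
bg = bg_counter_values(targets)
```

The sprite counter misses the new target and runs all the way to 15, giving one 16-line block, while the BG counter finishes its 8-line block and then switches to 3-line blocks.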

Anyway, similarly to the affine reference point registers described above, messing with POWCNT1 can also cause the 2D engine not to reset its mosaic counters before a new frame, which can cause the mosaic effect to shimmer vertically.

It is also likely that windows are affected in similar ways, but I haven't checked them yet.

All in all, I don't think this research of edge cases is going to be very useful for emulating commercial games, but it's always interesting and helps understand how the hardware is laid out, how everything interacts, and so on. It also helps cover some doubts that are inevitably raised while implementing new features.

Which brings me to the last item: restructuring the 2D renderer code.

The way the renderer code in melonDS was laid out was originally fairly simple: GPU had the "base" stuff (framebuffers, VRAM, video timing emulation), GPU3D had the 3D geometry engine (transforms and lighting, polygon setup), GPU3D_Soft had the 3D rendering engine (rasterizer), and GPU2D received anything pertaining to 2D rendering.

However, things have evolved since then.

While the 3D side was made to be modular, to eventually allow for hardware renderers, the 2D side was pretty much, well, set in stone. It wasn't made to be replaced.

melonDS followed the design of older DS emulators. In the past, it made sense to use OpenGL for 3D rendering, because at a surface level, the DS's 3D functionality maps fairly well to it. However, 2D rendering is another deal entirely. The tile engines do not map well to OpenGL. While it's possible today to leverage shaders to implement a lot of the functionality on the GPU, this was unthinkable in 2005. So it makes sense the old DS emulators were designed this way: OpenGL for 3D graphics, software scanline-based renderer for 2D graphics.

However, it's not 2005 anymore. In the past, Generic restructured the 2D renderer code to make it modular, so he could implement better renderers for his Switch port.

And now, I'm doing the same thing. As I've discussed before, my old approach to OpenGL rendering was a hack - it allowed me to reuse the old 2D renderer with minimal modifications, but it was pretty limited, which is why I've since changed gears, and started implementing an actual OpenGL renderer.

And I'm not a fan of how things work now. Right now, the emulator frontend is responsible for creating appropriate 3D and 2D renderers and passing them to the core. This makes it possible to support platform-specific renderers (like the Deko3D renderers on the Switch), but I don't like that it's technically possible to create mismatched renderers. Because the renderer I'm working on is only compatible with the OpenGL-based 3D renderers, it makes no sense to be able to mix and match them. My idea was to have a general renderer object which includes both, and avoids this possibility.

This also brings me to another fact: the rendering pipeline in melonDS doesn't accurately model DS hardware. I mean, it does its job quite well, but the way the modules are laid out doesn't really match the DS. I've been aware of this for years, but at the time it was easy to ignore - but now, it's more evident than ever.

Basically, in the DS, you find the following basic blocks (bar the 3D side):

* 2D engines A and B: those are the base tile engines. They composite BG layers and sprites, with effects such as blending, fade, windows, mosaic.

* LCD controller (LCDC): receives input from the 2D engines and sends it to the screens. It can apply its own fade effect (master brightness). It also handles VRAM display, the main memory display FIFO, and display capture.

The split is pretty clear if you observe which features are affected or not by POWCNT1. And it makes it clearer than ever, for example, that display capture is not part of 2D engine A, and doesn't belong in GPU2D.

Thus, it appears that restructuring this code properly will kill two birds with one stone.

This code restructuring may not sound very exciting, but it's good to have if it helps keep things clear, and often, adhering to the hardware's basic layout makes it easier to model things accurately.

As a bonus: since I was busy testing things on hardware, we went down the DS hardware rabbit hole again, and ended up testing things related to the VCOUNT register.

You might be already aware if you follow this blog, but: on the DS, the VCOUNT register is writable. This register keeps track of the vertical retrace position, but it is possible to modify it. The typical use is for syncing up consoles during local multiplayer, but it can also be used to alter the video framerate. The basic way it works is that writing to VCOUNT sets the value it will take after the current scanline ends.

melonDS has basic support for this, but we haven't dared dig into the details. And for good reason.

A few fun details, for example.

VCOUNT is 9 bits wide. The range is 0-262. You would think that if you write a value outside this range, it would detect the error, right?

Right?

Hahahahahahahahahahahahaahahaahh.

In typical DS fashion, this register accepts whatever values. You can set it to anything between 263 and 511. It will just keep counting until 511, and wrap to 0. We even found out that doing so can suppress the next frame's VBlank IRQ. Things like this happen because many video-related events are tied to VCOUNT reaching specific values.
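The counting behavior described so far fits in a few lines of Python. This is a sketch of the counter only, with made-up names. It doesn't attempt to model IRQs or anything on the LCD side.

```python
class VCount:
    LAST_LINE = 262   # normal range is 0-262
    MASK = 0x1FF      # the register is 9 bits wide

    def __init__(self):
        self.value = 0
        self.pending = None

    def write(self, value):
        # A write only takes effect once the current scanline ends.
        self.pending = value & self.MASK

    def end_of_scanline(self):
        if self.pending is not None:
            self.value = self.pending
            self.pending = None
        elif self.value == self.LAST_LINE:
            self.value = 0
        else:
            # Out-of-range values just keep counting up to 511, then wrap.
            self.value = (self.value + 1) & self.MASK


vcount = VCount()
vcount.write(300)
value_before_line_ends = vcount.value
vcount.end_of_scanline()
value_after_write = vcount.value
lines_until_wrap = 0
while vcount.value != 0:
    vcount.end_of_scanline()
    lines_until_wrap += 1
```

After writing 300, the counter takes 212 more scanlines to get back to 0, and it never passes through the 262 to 0 transition that normally ends a frame.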

Basically, the writable VCOUNT register is an endless rabbit hole of weirdness, and I'm not sure how much of it is realistic to emulate. VCOUNT affects not only the rendering logic, but also the signal that gets sent to the LCDs. Some manipulations can cause weird scanline effects, or cause the LCDs to fade out. How would one even emulate that? Even if we ignore the visual effects, we might need to look at the LCD sync signals with an oscilloscope to figure out what is happening.

Merry Christmas from melonDS -- by Arisotura

I know, I'm one day late.

There won't be anything very fancy for now, though. I haven't worked much on melonDS lately. Over the last week, my training period has been intense, and the long commute didn't help either. However, that went quite well, so I'm hopeful.

Another thing is, there isn't much more to show at this point.

What remains to be done is a bunch of cleanup, optimization work, and adding certain missing features, like proper chunked rendering if certain registers are changed mid-frame. Much like what the blargSNES hardware renderer does. Basically, turning this whole experimental setup into a finished product. It's always nice, but it doesn't really make for interesting screenshots.

As far as the 3D renderers are concerned, my work is mostly finished. I have ideas to improve the regular OpenGL renderer, but that's beyond the scope of the blackmagic3 branch for now. I could mention some, though: for example, attempting to add proper interpolation, instead of the "improved polygon splitting" hack. There would be some other old issues to fix in order to bring both OpenGL renderers closer to the software renderer, too.

With all this, I hope to be able to release melonDS 1.2 by early 2026. We'll see how this goes.

Either way, merry Christmas, happy new year, and all!

Why make it simple when it can be complicated? -- by Arisotura

First, a bit of a status update. I'm about to begin a training period of sorts, that will (hopefully!) lead to an actual job next year. It looks like a pretty nice job for me, too!

So that part is sorted out, for now.

Next, the OpenGL renderer is still in the works, although I've been taking a bit of a break from it.

But hey, when you happen to be stuck on a train for way longer than planned, what can you do? That's right, add features to your renderer! The latest fun feature I added is also the reason behind this post title.

The DS's 3D renderer has registers that set the clear color and depth, and a bunch of other clear attributes - basically what the color/depth/attribute buffers will be initialized to before drawing polygons. Much like glClearColor() and glClearDepth().

However, you can also use a clear bitmap. In this case, two 128K "slots" of texture VRAM (out of a total of 4) will be used to initialize the color and depth buffers: slot 2 for the color buffer, slot 3 for the depth buffer. The bitmaps are 256x256, and they can be scrolled. It isn't a widely used feature, but there are games that use it. As far as melonDS is concerned, the software renderer supports it, but it had always been missing from the OpenGL renderers.

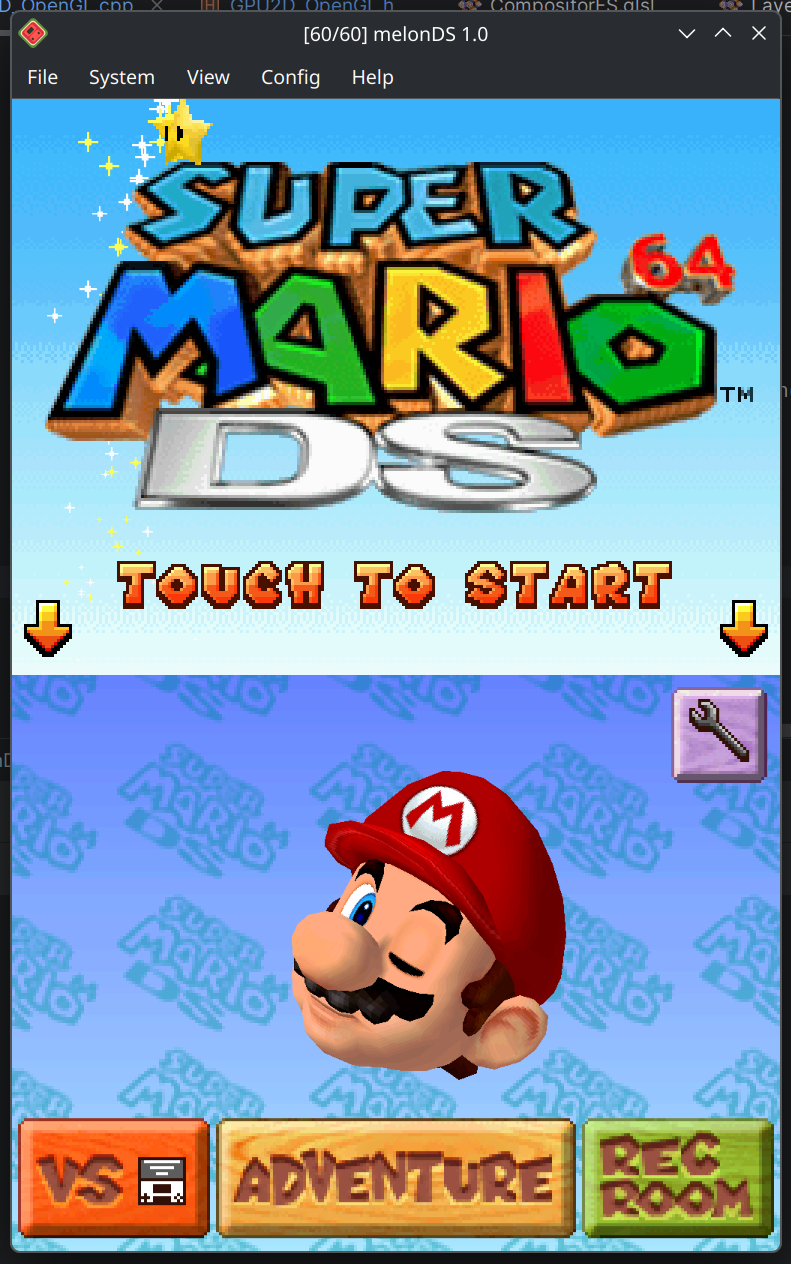

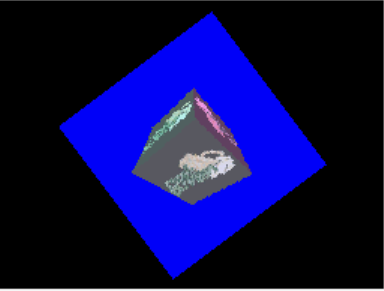

Since I was busy adding features to the compute shader renderer, I thought, hey, why not add this? Shouldn't be very difficult. And indeed, it wasn't. But the game I used to test it threw me for a loop.

This is Rayman Raving Rabbids 2. In particular, this is the screen that shows up after you've played one of the minigames. The bottom screen uses the clear bitmap feature - without it, the orange frame layer won't show up at all.

When I was debugging my code, I looked at my clear bitmap textures in RenderDoc, and expected to find the orange frame in the color texture, but there was nothing. For a while, I thought I had missed something, because I expected that layer to be in there. But it wasn't the case.

That layer is actually a 2D layer (BG1). The clear bitmap feature is used to block the 3D scene (including the blue background) where the orange frame would appear. To do this, they just use a depth bitmap. There's nothing mapped to texture slot 2, so the color bitmap is all zeroes (and therefore completely transparent).
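Here is a per-pixel Python sketch of that trick. It is an illustration rather than what any renderer actually does: the function names and depth values are made up, and it assumes a plain "less than" depth test with the 3D layer sitting in front of BG1.

```python
TRANSPARENT = None


def resolve_3d_pixel(clear_color, clear_depth, polygons):
    """Start from the clear bitmap values, then depth-test each polygon pixel."""
    color, depth = clear_color, clear_depth
    for poly_color, poly_depth in polygons:
        if poly_depth < depth:          # assumed "less than" depth test
            color, depth = poly_color, poly_depth
    return color


def composite(pixel_3d, pixel_bg1):
    """The 3D layer is in front; BG1 shows wherever it is transparent."""
    return pixel_bg1 if pixel_3d is TRANSPARENT else pixel_3d


scene = [("blue background", 0x4000)]

# Inside the frame: depth bitmap set to the nearest value, so nothing passes.
inside = composite(resolve_3d_pixel(TRANSPARENT, 0x0000, scene), "orange frame")
# Elsewhere: depth bitmap set to the farthest value, the 3D scene draws normally.
outside = composite(resolve_3d_pixel(TRANSPARENT, 0x7FFF, scene), "orange frame")
```

The color bitmap never has to contain the frame itself. The depth bitmap just punches a hole in the 3D layer, and the 2D layer behind it fills the hole.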

I also noticed the glitchy pixels on the right and bottom edges, and that had me chasing a phantom bug for a while - not a bug in melonDS, the edges of the clear bitmap are just uninitialized. It looks like someone at Ubisoft goofed up their for loops.

This made me wonder why the developers went for this approach, given how much VRAM it takes up.

When they could just have, you know... changed the priority orders to have BG1 cover the 3D scene. No need for a clear bitmap!

Another possibility was to draw this orange frame with the 3D engine. This would even have enabled them to add a shadow between it and the background, like they did on the top screen... maybe that was what they wanted to go for.

But this is the viewpoint of someone who knows the DS hardware pretty well, so... yeah. I realize not all developers would have this much knowledge. And hey, I won't complain -- the diversity in approaches helps test more possible use cases!

I could also talk about why I was messing with the compute shader renderer in the first place.

Remember this? That was my little render-to-texture demo.

I wanted to try adding support for hi-res capture textures in the 3D renderers. Reason why I started with the compute renderer is that it already has a texture cache, and thus does texturing in a clean way.

On the other hand, the regular OpenGL renderer just streams raw DS VRAM to the GPU and does all the texture addressing and decoding in its fragment shaders. It's the lazy approach to texturing: it works, it's simple to implement, but it's also suboptimal. It's a lot of redundant work done on the GPU that could be avoided by having it precomputed (ie. what the texture cache does), and it makes it harder to add support for things like texture filtering.
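As a rough illustration of the "precomputed" side of that trade-off, here is a small Python sketch of a texture cache: textures are decoded once, keyed by where they live in VRAM, and thrown away when the underlying memory changes. The class and method names are hypothetical, and only the 16-bit direct color format is handled. The real cache in the compute renderer is certainly more involved.

```python
def decode_direct_color(vram, addr, width, height):
    """Decode 16-bit texels (5 bits per channel, bit 15 = opaque) to RGBA tuples."""
    texels = []
    for i in range(width * height):
        raw = vram[addr + 2 * i] | (vram[addr + 2 * i + 1] << 8)
        r, g, b = raw & 31, (raw >> 5) & 31, (raw >> 10) & 31
        texels.append((r, g, b, 31 if raw & 0x8000 else 0))
    return texels


class TextureCache:
    """Hypothetical cache: decode once, reuse until VRAM is modified."""

    def __init__(self):
        self.entries = {}
        self.decodes = 0  # counts how often we actually had to decode

    def get(self, vram, addr, width, height):
        key = (addr, width, height)
        if key not in self.entries:
            self.entries[key] = decode_direct_color(vram, addr, width, height)
            self.decodes += 1
        return self.entries[key]

    def invalidate(self, start, length):
        """Drop every cached texture overlapping the dirty byte range."""
        end = start + length
        for key in list(self.entries):
            addr, width, height = key
            if addr < end and start < addr + width * height * 2:
                del self.entries[key]
```

Once textures are flat RGBA arrays like this, filtering them becomes a matter of reading neighboring texels, which is much harder when decoding happens per-fragment from raw VRAM.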

So, you guessed it: I want to backport the texture cache to the regular OpenGL renderer before I start to work on that one. There are also other improvements I want to make in there.

The texture cache could even benefit the software renderer, too.

Anyway, fun shit!

Hardware renderer progress -- by Arisotura

Hey hey, little status update! I've been having fun lately.

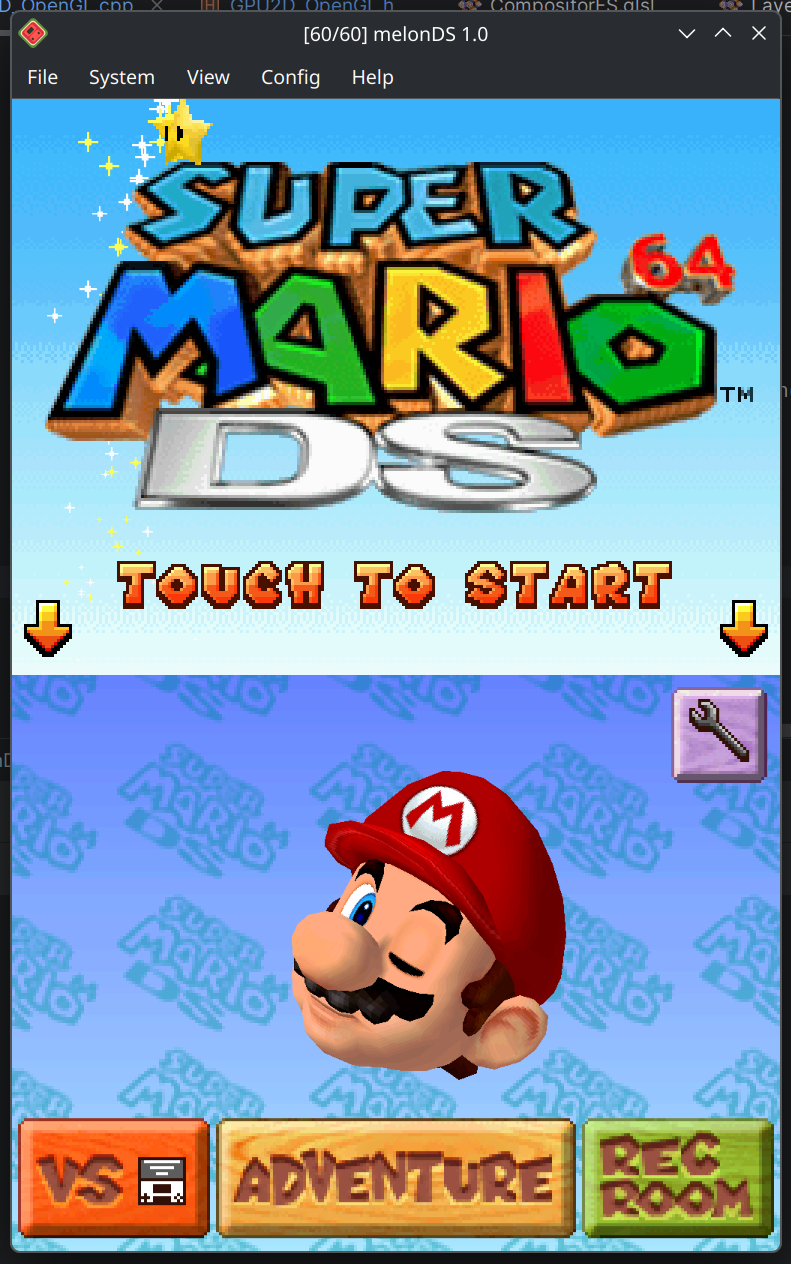

The hardware renderer has been progressing nicely lately. It's always exciting when you're able to assemble the various parts you've been working on into something coherent, and it suddenly starts looking a lot like a finished product. It's no longer just something I'm looking at in RenderDoc.

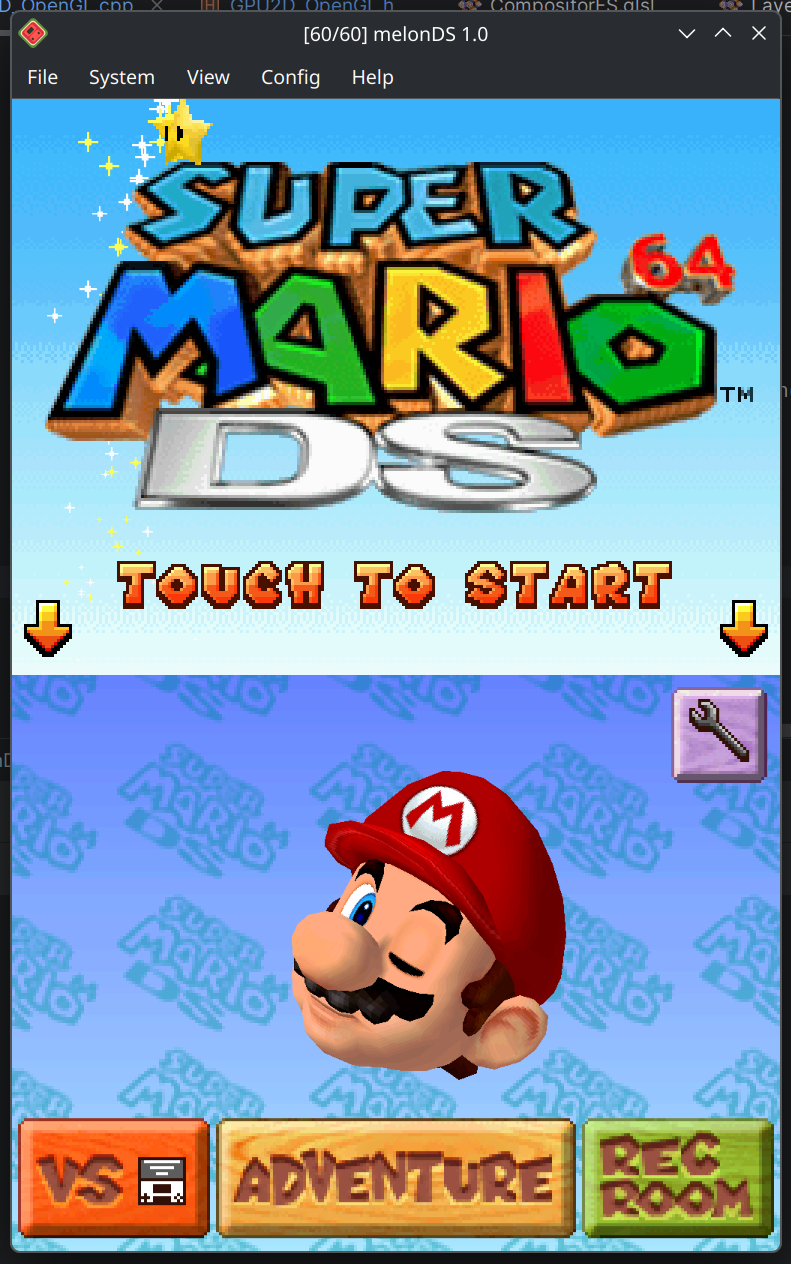

Those screenshots were taken with 4x upscaling (click them for full-size versions). The last one demonstrates hi-res rotscale in action. Not bad, I dare say.

It's not done yet, though, so I'll go over what remains to be done.

Mosaic

Shouldn't be very difficult to add.

Except for, you know, sprite mosaic. I don't really know yet how I'll handle that one. The way it works is intuitive if you're processing pixels left-to-right within a scanline, but this isn't how a modern GPU works.

Display capture

What was previously in place was a bit of a hack made to work with the old approach, so I will have to rework this. At least now I have the required system for tracking VRAM banks for this. But the whole part of capturing video output to OpenGL textures, and reusing them where needed, will need to be redone.

I also need to think of a way to sync hi-res captures back to emulated VRAM when needed.

Of course, support for this also needs to be added to the 3D renderers, for the sake of render-to-texture. Shouldn't be too difficult to add it to the compute renderer, but the old OpenGL renderer is another deal. I had designed that rather lazily, just streaming raw VRAM to the GPU, and never improved it. I could backport the texture cache the compute renderer uses, but it will take a while.

Mid-frame rendering

I need to add provisions in case certain things get changed mid-frame. I quickly did it for OAM, as shown by that iCarly screenshot above. The proof of concept works, but it can be improved upon, and extended to other things too.

The way this works is similar to the blargSNES hardware renderer. When certain state gets modified mid-frame, a section of the screen gets rendered with the old state - that section goes to the top of the screen, or wherever the previous section ended if there was any. Upon VBlank, we finish rendering in the same way.
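The section logic described above can be sketched in a few lines of Python. This is only an illustration under assumed names (`SectionRenderer`, `write`, `vblank`). It records which scanline ranges get rendered with which state snapshot, rather than doing any actual rendering.

```python
class SectionRenderer:
    """Hypothetical sketch: split a frame into sections on mid-frame state changes."""

    HEIGHT = 192  # visible scanlines

    def __init__(self, state):
        self.state = dict(state)
        self.next_line = 0   # first scanline not rendered yet
        self.sections = []   # (first_line, end_line_exclusive, state snapshot)

    def _flush(self, line):
        # Render everything up to `line` with the state as it was until now.
        if line > self.next_line:
            self.sections.append((self.next_line, line, dict(self.state)))
            self.next_line = line

    def write(self, line, key, value):
        """A register/memory write happening while scanline `line` is next."""
        if self.state.get(key) == value:
            return  # nothing actually changed, no need to split the frame
        self._flush(line)
        self.state[key] = value

    def vblank(self):
        """Finish the frame the same way, then reset for the next one."""
        self._flush(self.HEIGHT)
        done, self.sections, self.next_line = self.sections, [], 0
        return done
```

A frame with no mid-frame changes ends up as a single section covering all 192 lines, so the common case stays cheap.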

There are exceptions for things that are very likely to be changed mid-frame (ie. window positions, BG scroll positions), and are relatively inexpensive to deal with. It's worth noting that on the DS, it's less frequent for video registers to be changed mid-frame, because the hardware is more flexible. By comparison, in something like a SNES game, you will see a lot more mid-frame state changes.

Either way, time will tell what is worth accounting for and what needs to be optimized.

Filtering

Hey, let's not forget why we came in here in the first place.

Hopefully, with the way the shaders are structured, it shouldn't be too hard to slot in filters. Bilinear, bicubic, HQX, xBRZ, you name it.

What I'm not too sure about is how well it'll work with sprites. It's not uncommon for games to splice together several small sprites to form bigger graphical elements, and I'm not sure how that'll work with filtering. We'll see.

Misc. things

The usual, a lot of code cleanup, optimization work, fixing up little details, and so on.

And work to make the code nicer to work with, which isn't particularly exciting.

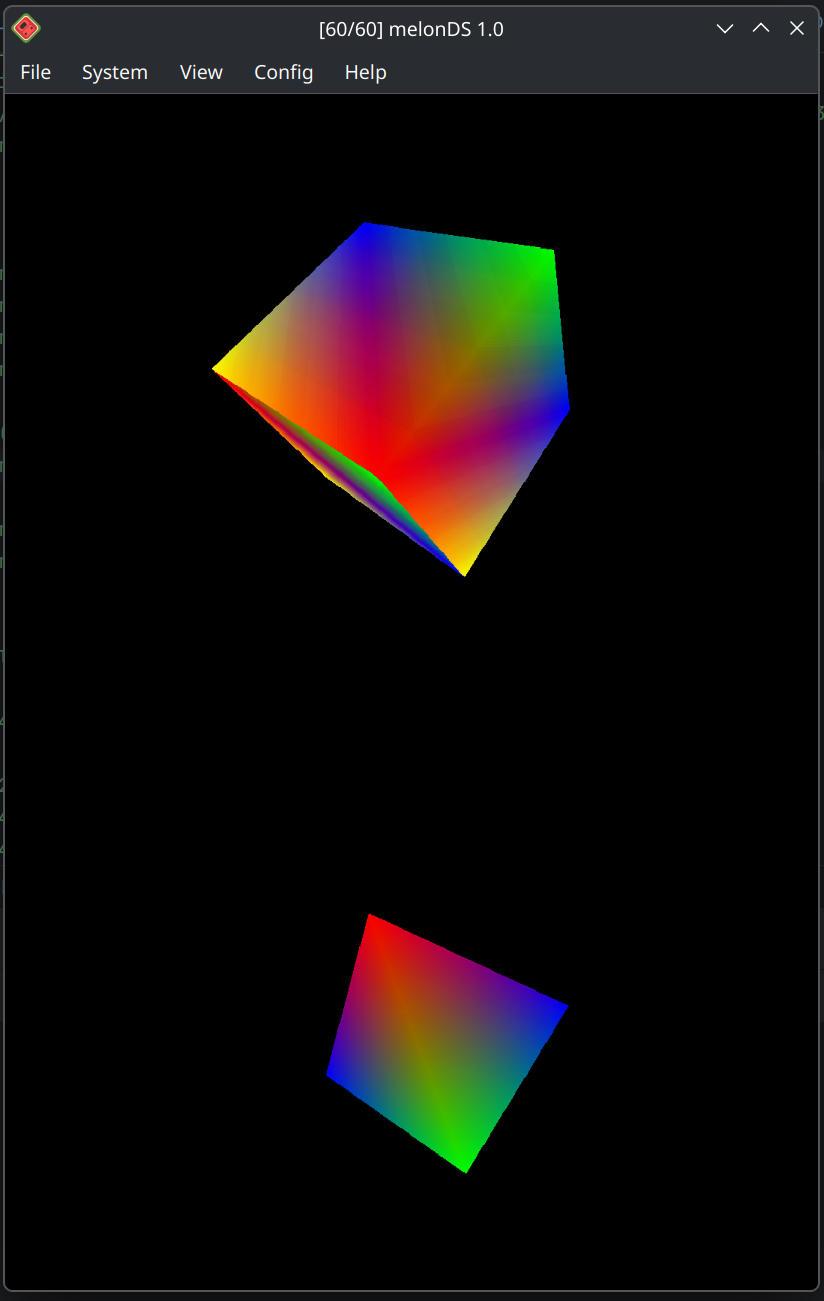

This gives you a rough idea of where things are at, and what's left to be done. To conclude, I'll leave you with an example of what happens when Arisotura goofs up her OpenGL calls:

Have fun!

Hardware rendering, the fun -- by Arisotura

This whole thing I'm working on gives me flashbacks from blargSNES. The goal and constraints are different, though. We weren't doing upscaling on the 3DS, but also, we had no fragment shaders, so we were much more limited in what we could do.

Anyway, these days, I'm waist-deep into OpenGL. I'm determined to go further than my original approach to upscaling, and it's a lot of fun too.

I might as well talk more about that approach, and what its limitations are.

First, let's talk about how 2D layers are composited on the DS.

There are 6 basic layers: BG0, BG1, BG2, BG3, sprites (OBJ) and backdrop. Sprites are pre-rendered and treated as a flat layer (which means you can't blend a sprite with another sprite). Backdrop is a fixed color (entry 0 of the standard palette), which basically fills any space not occupied by another layer.

For each pixel, the PPU keeps track of the two topmost layers, based on priority orders.

Then, you have the BLDCNT register, which lets you choose a color effect to be applied (blending or fade effects), and the target layers it may apply to. For blending, the "1st target" is the topmost pixel, and the "2nd target" is the pixel underneath. If the layers both pixels belong to are adequately selected in BLDCNT, they will be blended together, using the coefficients in the BLDALPHA register. Fade effects work in a similar fashion, except since they only apply to the topmost pixel, there's no "2nd target".
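For reference, here is roughly what these color effects look like in Python. This is a sketch under assumptions: colors are treated as 5-bit RGB tuples, coefficients are in 1/16 units and capped at 16 (the formula commonly documented for the GBA/DS), and the function names and the `evy` fade coefficient parameter are labels chosen for this example rather than anything taken from melonDS.

```python
def blend(first, second, eva, evb):
    """Blend the topmost pixel (1st target) with the one underneath (2nd target)."""
    eva, evb = min(eva, 16), min(evb, 16)
    return tuple(min(31, (a * eva + b * evb) >> 4) for a, b in zip(first, second))


def fade_up(color, evy):
    """Fade towards white; only the topmost pixel is involved."""
    evy = min(evy, 16)
    return tuple(c + (((31 - c) * evy) >> 4) for c in color)


def fade_down(color, evy):
    """Fade towards black; only the topmost pixel is involved."""
    evy = min(evy, 16)
    return tuple(c - ((c * evy) >> 4) for c in color)


def composite_pixel(top, under, first_targets, second_targets, eva, evb):
    """top/under are (layer_name, color); blend only if both layers are selected."""
    top_layer, top_color = top
    under_layer, under_color = under
    if top_layer in first_targets and under_layer in second_targets:
        return blend(top_color, under_color, eva, evb)
    return top_color
```

For example, `composite_pixel(("BG0", (31, 0, 0)), ("BG1", (0, 0, 31)), {"BG0"}, {"BG1"}, 8, 8)` mixes the two pixels half and half, while the same call with `"BG1"` missing from the 2nd target set just returns the top color untouched.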

Then you also have the window feature, which can exclude not only individual layers from a given region, but can also disable color effects. There are also a few special cases: semi-transparent sprites, bitmap sprites, and the 3D layer. Those all ignore the color effect and 1st target selections in BLDCNT, as well as the window settings.

In melonDS, the 2D renderer renders all layers according to their priority order, and keeps track of the last two values for each pixel: when writing a pixel, the previous value is pushed down to a secondary buffer. This way, at the end, the two buffers can be composited together to form the final video frame.
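The "push down" idea can be sketched like this in Python. It is a simplified illustration with hypothetical names, not the actual melonDS data structures: layers are drawn from lowest to highest priority, and each write demotes the previous topmost value.

```python
class ScanlineBuffers:
    """Hypothetical sketch of the two-buffer tracking for one scanline."""

    def __init__(self, width, backdrop):
        self.top = [backdrop] * width     # topmost pixel so far
        self.under = [backdrop] * width   # pixel right underneath it

    def write(self, x, value):
        # The previous topmost value gets pushed down to the secondary buffer.
        self.under[x] = self.top[x]
        self.top[x] = value


def draw_layers(width, backdrop, layers):
    """layers: lowest priority first, each a dict {x: pixel} of opaque pixels."""
    buffers = ScanlineBuffers(width, backdrop)
    for layer in layers:
        for x, pixel in layer.items():
            buffers.write(x, pixel)
    return buffers
```

At the end, `top` and `under` hold exactly the two values needed to apply blending for each pixel.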

I've talked a bit about how 3D upscaling was done: basically, the 3D layer is replaced with a placeholder. The final compositing step is skipped, and instead, the incomplete buffer is sent to the GPU. There, a compositor shader can sample this buffer and the actual hi-res 3D layer, and finish the work. This requires keeping track of not just the last two values, but the last three values for any given pixel: if a given 3D layer pixel turns out to be fully transparent, we need to be able to composite the pixels underneath "as normal".
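Here is a small Python sketch of why three values are needed instead of two. The compositor only learns whether the 3D pixel is transparent once it samples the hi-res 3D layer, so it has to be able to fall back on what was underneath. The placeholder marker and function name are made up for this example.

```python
PLACEHOLDER_3D = "3D"  # hypothetical marker written by the 2D renderer


def resolve_pixel(stack, pixel_3d):
    """Return the two topmost values once the real 3D pixel is known.

    stack: (top, middle, bottom) as left by the 2D renderer, with at most
           one entry being the 3D placeholder.
    pixel_3d: (r, g, b, a) sampled from the hi-res 3D layer.
    """
    resolved = []
    for value in stack:
        if value == PLACEHOLDER_3D:
            if pixel_3d[3] == 0:
                continue  # fully transparent: the pixels underneath move up
            resolved.append(pixel_3d)
        else:
            resolved.append(value)
    return resolved[0], resolved[1]
```

With only two values, a transparent 3D pixel on top would leave the compositor with a single pixel and nothing to blend it against.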

This approach was good in that it allowed for performant upscaling with minimal modifications to the 2D renderer. However, it was inherently limited in what was doable.

It became apparent as I started to work on hi-res display capture. My very crude implementation, built on top of that old approach, worked fine for the simpler cases like dual-screen 3D. However, it was evident that anything more complex wouldn't work.

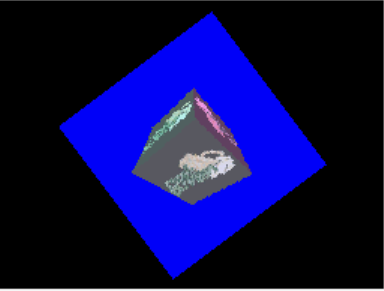

For example, in this post, I showed a render-to-texture demo that uses display capture. I also made a similar demo that renders to a rotating BG layer rather than a 3D cube:

And this is what it looks like when upscaled with the old approach:

Basically, when detecting that a given layer is going to render a display capture, the renderer replaces it with a placeholder, like for the actual 3D layer. The placeholder values include the coordinates within the source bitmap, and the compositor shader uses them to sample the actual hi-res bitmap.

The fatal flaw here is that this calculation doesn't account for the BG layer's rotation. Hence why it looks like shit. Linear interpolation could solve this issue, but it's just one of many problems with this approach.

Another big issue was filtering.

The basic reason is that when you're applying an upscaling filter to an image, for each given position within the destination image, you're going to be looking at not only the nearest pixel from the source image, but also the surrounding pixels, in an attempt at inferring the missing detail. For example, a bilinear filter works on a 2x2 block of source pixels, while it's 4x4 for a bicubic filter, and as much as 5x5 for xBRZ.
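As a concrete example of the simplest case, here is a bilinear sample over a 2x2 block in Python. It's a generic textbook sketch (single-channel image, coordinates clamped at the edges), not code from melonDS.

```python
def bilinear_sample(image, x, y):
    """Sample a 2D list of values at fractional source coordinates (x, y)."""
    height, width = len(image), len(image[0])
    x = min(max(x, 0.0), width - 1)
    y = min(max(y, 0.0), height - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    # Interpolate horizontally on both rows, then vertically between them.
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy
```

Note how every output value needs four neighboring source pixels from the same image. That requirement is exactly what becomes awkward when the "image" is really several layers mixed together.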

In our case, the different graphical layers are smooshed together into a weird 3-layer cake. This makes it a major pain to perform filtering: say you're looking at a source pixel from BG2, you'd want to find neighboring BG2 pixels, but they may be at different levels within the layer cake, or they may just not be part of it at all. All in all, it's a massive pain in the ass to work with.

Back in 2020, I had attempted to implement an xBRZ filter as a bit of a demo, to see how it'd work. I had even recorded a video of it on Super Princess Peach, and it was looking pretty decent... but due to the aforementioned issues, there were always weird glitches and other oddball issues, and it was evident that this was stretching beyond the limits of the old renderer approach. The xBRZ filter shader did remain in the melonDS codebase, unused...

-

So, basically, I started working on a proper hardware-accelerated 2D renderer.

As of now, I'm able to decode individual BG layers and sprites to flat textures. The idea is that doing so will simplify filtering a whole lot: instead of having to worry about the original format of the layer, the tiles, the palettes, and so on, it would just be a matter of fetching pixels from a flat texture.

Here's an example of sprite rendering. They are first pre-rendered to an atlas texture, then they're placed on a hi-res sprite layer.

This type of renderer allows for other nifty improvements too: for example, hi-res rotation/scaling.

Next up is going to be rendering BG layers to similar hi-res layers. Once it's all done, the layers can be sent to the compositor shader and the job can be finished. I also have to think of provisions to deal with possible mid-frame setup changes. Anyone remember that midframe-OAM-modifying foodie game?

There will also be some work on the 3D renderers, to add support for things like render-to-texture, but also possibly adding 3D enhancements such as texture filtering.

-

I can hear the people already, "why make this with OpenGL, that's old, you should use Vulkan".

Yeah, OpenGL is no longer getting updates, but it's a stable and mature API, and it isn't going to be deprecated any time soon. For now, I see no reason to stop using it immediately.

However, I'm also reworking the way renderers work in melonDS.

Back then, Generic made changes to the system, so he could add different 2D renderers for the Switch port: a version of the software renderer that uses NEON SIMD, and a hardware-accelerated renderer that uses Deko3D.

I'm building upon this, but I want to also integrate things better: for example, figuring out a way to couple the 2D and 3D renderers better, and generally a cleaner API.

The idea is to also make it easier to implement different renderers. For example, the current OpenGL renderer is made with fast upscaling in mind, but we could have different renderers for mobile platforms (ie. OpenGL ES), that are first and foremost aimed at just being fast. Of course, we could also have a Vulkan renderer, or Direct3D, Metal, whatever you like.

melonDS 1.1 is out! -- by Arisotura

As promised, here is the new release: melonDS 1.1.

So, what's new in this release?

EDIT - there was an issue with the release builds that had been posted, so if your JIT option is greyed out and you're not using an x64 Mac, please redownload the release.

DSP HLE

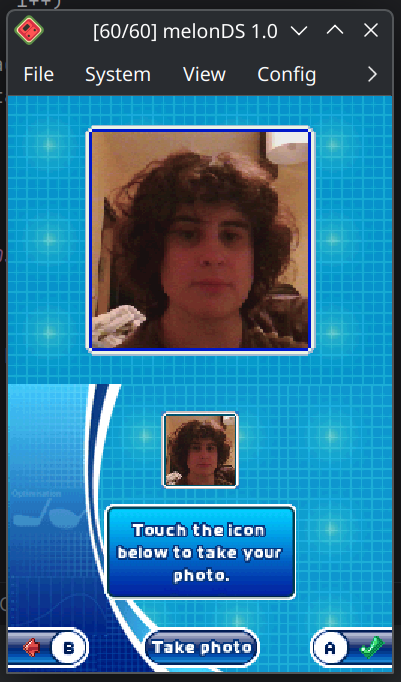

This is going to be a big change for DSi gamers out there.

If you've been playing DSi titles in melonDS, you may have noticed that sometimes they run very slow. Single-digit framerates. Wouldn't be a big deal if melonDS was always this slow, but obviously, it generally performs much better, so this sticks out like a sore thumb.

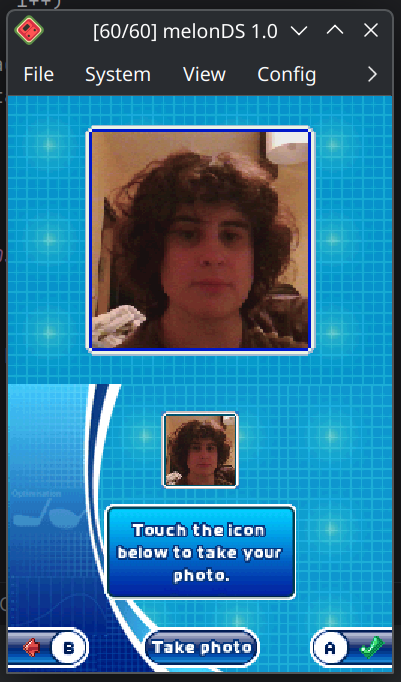

This is because those titles use the DSi's DSP. What is the DSP, you ask? A specific-purpose (read: weird) processor that doesn't actually do much besides being very annoying and resource-intensive to emulate. They use it for such tasks as downscaling pictures or playing a camera shutter sound when you take a picture.

With help from CasualPokePlayer, we were able to figure out the 3 main classes of DSP ucodes those games use, determine their functionality, and implement HLE equivalents in melonDS. Thus, those wonderful DSP features can be emulated without utterly wrecking performance.

DSP HLE is a setting, which you will find in the emulation settings dialog, DSi-mode tab. It is enabled by default.

Note that if it fails to recognize a game's DSP ucode, it will fall back to LLE. Similarly, homebrew ucodes will also fall back to LLE. There's the idea of adding a DSP JIT to help with this, but it's not a very high priority right now.

DSi microphone input

This was one of the last big missing features in DSi mode, and it is now implemented, thus further closing the gap between DS and DSi emulation in melonDS.

The way external microphone input works was also changed: instead of keeping your mic open at all times, melonDS will only open it when needed. This should help under certain circumstances, such as when using Bluetooth audio.

High-quality audio resampling

The implementation of DSP audio involved several changes to the way melonDS produces sound. Namely, melonDS used to output at 32 kHz, but with the new DSi audio hardware, this was changed to 47 kHz. I had added in some simple resampling, so melonDS would produce 47 kHz audio in all cases. But this caused audio quality issues for a number of people.

Nadia took matters into her own hands and replaced my crude resampler with a high-quality blip-buf resampler. Not only are all those problems eliminated, but it also means the melonDS core now outputs at a nice 48 kHz frequency, much easier for frontends to deal with than the previous weird numbers.

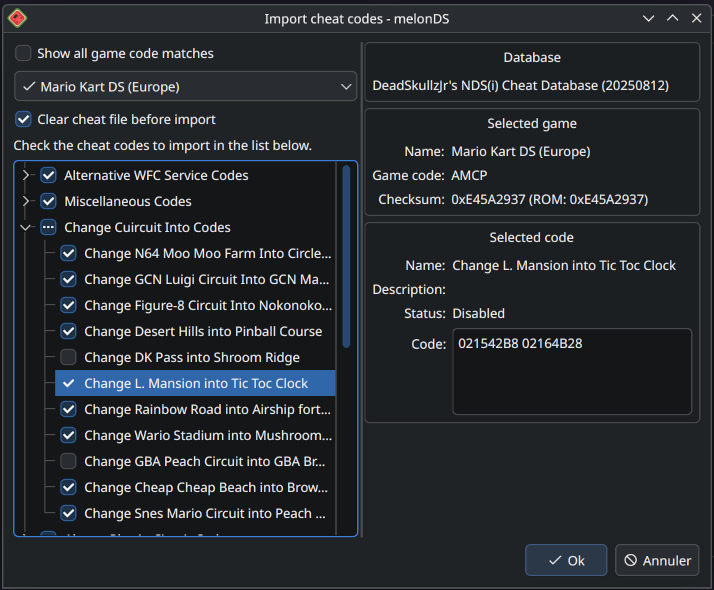

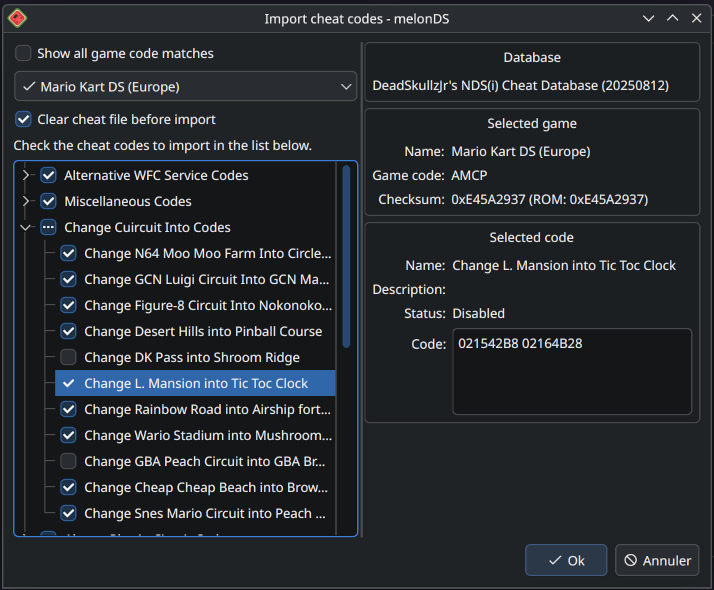

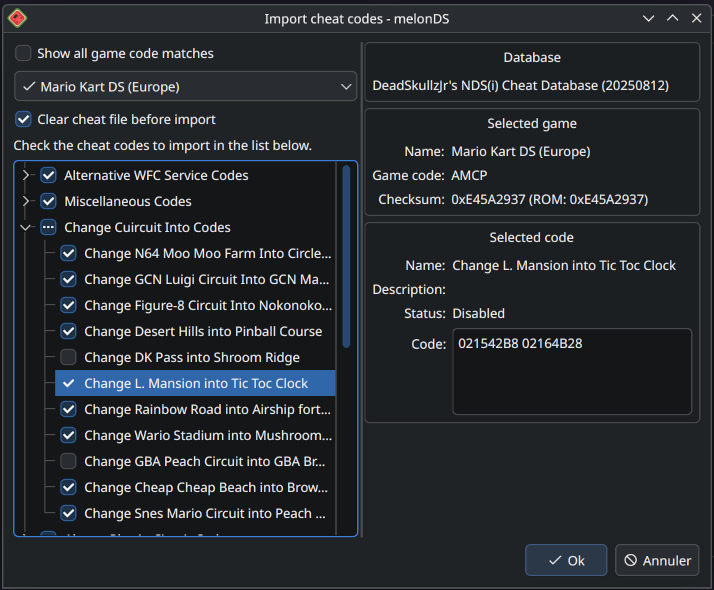

Cheat database support

If you've used cheats in melonDS, surely you've found it inconvenient to have to manually enter them into the editor. But this is no more: you can now grab the latest R4 cheat database (usrcheat.dat) for example, and import your cheat codes from that.

The cheat import dialog will show you which game entries match your current game, show the cheat codes they contain, and let you select which codes to import. You can also choose whether to clear any previously existing cheat codes or to keep them when importing new codes.

melonDS's cheat code system was also improved in order to fully preserve the structure found in usrcheat.dat. Categories and cheat codes can now have descriptions, categories have an option to allow only one code in them to be enabled, and codes can be created at the root, without having to be in a category.

The cheat file format (.mch) was also modified to add support for this. The parser is backwards-compatible, so it will recognize old .mch files just fine. However, new files won't be able to be recognized by older melonDS versions.

The cheat editor UI was also revamped to add support for the new functionality, and generally be more flexible and easier to work with. For example, it's now possible to reorder your cheat codes by moving them around in the list.

Compute shader renderer fix

Those of you who have tried the compute shader renderer may have noticed that it could start to glitch out at really high resolutions. This was due to running out of tile space.

We merged FireNX70's pull request, which implements tile size scaling in order to alleviate this problem. This means the renderer should now be able to go pretty high in resolution without issues.

Wayland OpenGL fix

If you use Wayland and have tried to use the OpenGL renderers, you may have noticed that it made the melonDS window glitchy, especially when using hiDPI scaling.

I noticed that glitch too, but had absolutely no idea where to start looking for a fix. So I kinda just... didn't use OpenGL, and put that on the backburner.

Until a while ago, when I felt like trying modern PCSX2. I was impressed by how smoothly it ran, compared to what it was like back in 2007... but more importantly, I realized that it was rendering 3D graphics in its main window alongside UI elements, that it uses Qt and OpenGL just like melonDS, and that it was flawless, no weird glitchiness.

So I went and asked the PCSX2 team about it. Turns out they originally took their OpenGL context code from DuckStation, but improved upon it. Funnily enough, melonDS's context code also comes from there. Small world.

In the end, the PCSX2 folks told me about what they did to fix Wayland issues. I tried one of the fixes that involved just two lines of code, and... it completely fixed the glitchiness in melonDS. So, thanks there!

BSD CI

We now have CI for FreeBSD, OpenBSD and NetBSD, courtesy of Rayyan and Izder456. This means we're able to provide builds for those platforms, too.

Adjustments were also done to the JIT recompiler so it will work on those platforms.

Fixing a bunch of nasty bugs

For example: it has been reported that melonDS 1.0 could randomly crash after a while if multiple instances were opened. Kind of a problem, given that local multiplayer is one of melonDS's selling points. So, this bug has been fixed.

Another fun example: it sometimes occurred that melonDS wouldn't output any sound, for some mysterious reason. As it was random and seemingly had a pretty low chance of occurring, I was really not looking forward to trying to reproduce and fix it... But Nadia saved the day by providing a build that exhibited this issue 100% of the time. With a reliable way to reproduce the bug, I was able to track it down and it was fixed.

Nadia also fixed another bug that caused possible crashes that appeared to be JIT-related, but turned out to be entirely unrelated.

All in all, melonDS 1.1 should be more stable and reliable.

There's also the usual slew of misc bugfixes and improvements.

However, we realized that there's a bug with the JIT that causes a crash on x86 Macs. We will do our best to fix this, but in the meantime, we had to disable that setting under that platform.

Future plans

The hi-res display capture stuff will be for release 1.2. Even if I could rush to finish it for 1.1, it wouldn't be wise. Something of this scope will need comprehensive testing.

I also have more ideas that will be for further releases. I want to experiment with RTCom support, netplay, a different type of UI, ...

And then there's also changes I have in mind for this website. The current layout was nice in the early days, but there's a lot of posts now, and it's hard to find specific posts. I'd also want the homepage to present information in a more attractive manner, make it more evident what the latest melonDS version is, maybe have less outdated screenshots, ... so much to do.

Anyway, you can grab melonDS 1.1 on the downloads page, as usual.

You can also donate to the project if you want, that's always appreciated.

Hi-res display capture: we're getting there! -- by Arisotura

Sneak peek of the blackmagic3 branch:

(click them for full-res versions)

Those are both dual-screen 3D scenes, but notice how both screens are nice and smooth and hi-res.

Now, how far along are we actually with this?

As I said in the previous post, this is an improved version of the old renderer, which was based on a simple but limited approach. At the time, it was easy enough to hack that on top of the existing 2D engine. But now, we're reaching the limits of what is possible with this approach. So, consider this a first step. The second step will be to build a proper OpenGL-powered 2D engine, which will open up more crazy possibilities as far as graphical enhancements go.

I don't know if this first step will make it in melonDS 1.1, or if it will be for 1.2. Turns out, this is already a big undertaking.

I added code to keep track of which VRAM blocks are used for display captures. It's not quite finished, it's missing some details, like freeing capture buffers that are no longer in use, or syncing them with emulated VRAM if the CPU tries to access VRAM.

It also needs extensive testing and optimization. For this first iteration, for once, I tried to actually build something that works, rather than spend too much time trying to imagine the perfect design. So, basically, it works, but it's inefficient... Of course, the sheer complexity of VRAM mapping on the DS doesn't help at all. Do you remember? You can make the VRAM banks overlap!

So, yeah. Even if we end up making a new renderer, all this effort won't go to waste: we will have the required apparatus for hi-res display capture.

So far, this renderer does its thing. It detects when a display capture is used, and replaces it with an adequate hi-res version. For the typical use cases, like dual-screen 3D or motion blur, it does the job quite well.

However, I made a demo of "render-to-rotscale-BG": like my render-to-texture demo in the previous post, but instead of rendering the captured texture on the faces of a bigger cube, it is simply rendered on a rotating 128x128 BG layer. Nothing very fancy, but those demos serve to test the various possibilities display capture offers, and some games also do similar things.

Anyway, this render-to-rotscale demo looks like crap when upscaling is used. It's because the renderer's shader works with the assumption that display capture buffers will be drawn normally and not transformed. The shader goes from original-resolution coordinates and interpolates between them in order to sample higher-resolution images. In the case of a rotated/scaled BG layer, the interpolation would need to take the BG layer's transform matrix into account.
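To illustrate what "taking the transform matrix into account" would mean, here is a hedged Python sketch. It assumes the usual affine BG model (source coordinate = reference point + matrix × screen coordinate), uses floats instead of the hardware's fixed-point values, and the function names are invented for the example.

```python
def rotscale_source_coords(sx, sy, matrix, ref):
    """Map a screen position to a position inside the BG's source bitmap.

    matrix: (a, b, c, d) affine parameters; ref: (x, y) reference point.
    """
    a, b, c, d = matrix
    return ref[0] + a * sx + b * sy, ref[1] + c * sx + d * sy


def hires_sample_coords(px, py, scale, matrix, ref):
    """Where to sample the hi-res capture for upscaled output pixel (px, py)."""
    # Go back to (fractional) original-resolution screen coordinates...
    sx, sy = px / scale, py / scale
    # ...run them through the BG transform, keeping the fractional part...
    u, v = rotscale_source_coords(sx, sy, matrix, ref)
    # ...and scale up into the hi-res capture buffer.
    return u * scale, v * scale
```

With an identity matrix this degenerates to "sample at the same position", which is the assumption the old shader makes. With a rotation, neighboring output pixels step through the capture along a rotated axis, and plain screen-space interpolation no longer lands on the right texels.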

I decided to postpone this to the second step. Just think of the possibilities the improved renderer would offer: hi-res rotation/scale, antialiasing, filtering on layers and sprites, ...

And the render-to-texture demo won't even work for now. This one is tricky, it will require some communication between the 2D and 3D renderers. It might also require reworking the way texturing is done: for example my old OpenGL renderer just streams raw VRAM to the GPU and lets the shader do the decoding. It's lazy, but it was a simple way to get texturing working. But again, a proper texture cache here would open up more enhancement possibilities. Generic did use such a cache in his compute shader renderer, so it could probably serve for both renderers.

That's about it for what this renderer can do, for now. I also have a lot of cleanup and tying-loose-ends to do. I made a mess.

Stay tuned!

Display capture: oh, the fun! -- by Arisotura

This is going to be a juicy technical post related to what I'm working on at the moment.

Basically, if you've used 3D upscaling in melonDS, you know that there are games where it doesn't work. For example, games that display 3D graphics on both screens: they will flicker between the high-resolution picture and a low-resolution version. Or in other instances, it might just not work at all, and all you get is low-res graphics.

It's linked to the way the DS video hardware works. There are two 2D tile engines, but there is only one 3D engine. The output from that 3D engine is sent to the main 2D engine, where it is treated as BG0. You can also change which screen each 2D engine is connected to. But you can only render 3D graphics on one screen.

So how do those games render 3D graphics on both screens?

This is where display capture comes in. The principle is as follows: you render a 3D scene to the top screen, all while capturing that frame to, say, VRAM bank B. On the next frame, you switch screens and render a 3D scene to the bottom screen. Meanwhile, the top screen will display the previously captured frame from VRAM bank B, and the frame you're rendering will get captured to VRAM bank C. On the next frame, you render 3D graphics to the top screen again, and the bottom screen displays the capture from VRAM bank C. And so on.

This way, you're effectively rendering 3D graphics to both screens, albeit at 30 FPS. This is a typical use case for display capture, but not the only possibility.
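The ping-pong between screens and VRAM banks can be simulated in a few lines of Python. This is purely illustrative: frames are represented as strings and the function name is made up.

```python
def simulate_dual_screen_3d(frame_count):
    """Return what each screen shows on every frame as (top, bottom) tuples."""
    banks = {"B": None, "C": None}  # captured frames held in VRAM banks B and C
    shown = []
    for frame in range(frame_count):
        render_top = frame % 2 == 0
        capture_bank = "B" if render_top else "C"
        display_bank = "C" if render_top else "B"

        live = f"3D frame {frame}"
        banks[capture_bank] = live        # capture what is being rendered now
        captured = banks[display_bank]    # the other screen shows the older capture

        shown.append((live, captured) if render_top else (captured, live))
    return shown
```

Running it for a few frames shows each screen only getting a fresh 3D image every other frame, which is where the 30 FPS figure comes from.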

Display capture can receive input from two sources: source A, which is either the main 2D engine output or the raw 3D engine output, and source B, which is either a VRAM bank or the main memory display FIFO. Then you can either select source A, or source B, or blend the two together. The result from this will be written to the selected output VRAM bank. You can also choose to capture the entire screen or a region of it (128x128, 256x64 or 256x128).
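In Python, the source selection could be sketched as below. The blend formula (weights in 1/16 units, 5-bit channels, alpha ignored) is a simplifying assumption for illustration, and the names are hypothetical.

```python
# Capture sizes: the full 256x192 screen, or one of the smaller regions.
CAPTURE_SIZES = [(128, 128), (256, 64), (256, 128), (256, 192)]


def capture_pixel(src_a, src_b, mode, eva=16, evb=0):
    """Compute one captured pixel.

    src_a: pixel from source A (2D engine output or raw 3D output)
    src_b: pixel from source B (VRAM bank or main memory display FIFO)
    mode:  "A", "B" or "blend"
    """
    if mode == "A":
        return src_a
    if mode == "B":
        return src_b
    eva, evb = min(eva, 16), min(evb, 16)
    return tuple(min(31, (a * eva + b * evb) >> 4) for a, b in zip(src_a, src_b))
```

Feeding the previous capture back in as source B and blending it with the live frame is how a hardware motion blur effect could be built on top of this.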

All in all, quite an interesting feature. You can use it to do motion blur effects in hardware, or even to render graphics to a texture. Some games even do software processing on captured frames to apply custom effects. It is also used by the aging cart to verify the video hardware: it renders a scene and checksums the captured output.

For example, here's a demo of render-to-texture I just put together, based on the libnds examples:

(video file)

The way this is done isn't very different from how dual-screen 3D is done.

Anyway, this stuff is very related to what I'm working on, so I'm going to explain a bit how upscaling is done in melonDS.

When I implemented the OpenGL renderer, I first followed the same approach as other emulators: render 3D graphics with OpenGL, read out the framebuffer and send it to the 2D renderer. Simple. Then, in order to support upscaling, I just had to increase the resolution of the 3D framebuffer. To compensate for this, the 2D renderer would push out more pixels.

The issue was that it was suboptimal: if I pushed the scaling factor to 4x, it would get pretty slow. On one hand, in the 2D renderer, pushing out more pixels takes more CPU time. On the other hand, on a PC, reading back from GPU memory is slow. The overhead tends to grow quadratically when you increase the output resolution.
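A quick back-of-the-envelope calculation in Python shows the quadratic growth. The 4 bytes per pixel figure is an assumption about the readback format, used only to give an order of magnitude.

```python
def framebuffer_pixels(scale):
    """Number of pixels in a 256x192 frame upscaled by an integer factor."""
    return (256 * scale) * (192 * scale)


def readback_bytes(scale, bytes_per_pixel=4):
    """Bytes to read back from the GPU per frame (assuming 4 bytes per pixel)."""
    return framebuffer_pixels(scale) * bytes_per_pixel


for scale in (1, 2, 4, 8):
    print(scale, framebuffer_pixels(scale), readback_bytes(scale))
```

Going from 1x to 4x multiplies the pixel count by 16, both for the GPU readback and for the CPU-side 2D renderer that has to push those pixels out.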

So instead, I went for a different approach. The 2D renderer renders at 256x192, but the 3D layer is replaced with placeholder values. This incomplete framebuffer is then sent to the GPU along with the high-resolution 3D framebuffer, and the two are spliced together. The final high-resolution output can be sent straight to the screen, never leaving GPU memory. This approach is a lot faster than the previous one.
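The splicing step can be sketched on the CPU in Python as follows. In melonDS this happens in a shader. Here the placeholder is simply `None`, the frames are lists of rows, and the function name is invented for the example.

```python
def splice(frame_2d, frame_3d, scale, placeholder=None):
    """Combine a low-res 2D frame with a hi-res 3D frame.

    frame_2d: rows of pixels at original resolution, with `placeholder`
              wherever the 3D layer should show through.
    frame_3d: rows of pixels at `scale` times the original resolution.
    """
    height, width = len(frame_2d), len(frame_2d[0])
    output = []
    for y in range(height * scale):
        row = []
        for x in range(width * scale):
            value = frame_2d[y // scale][x // scale]  # nearest low-res pixel
            row.append(frame_3d[y][x] if value is placeholder else value)
        output.append(row)
    return output
```

The 2D pixels just get repeated, while the 3D pixels keep their full resolution. Nothing here requires reading the 3D framebuffer back to the CPU.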

This is what was originally implemented in melonDS 0.8. Since this rendering method bypassed the regular frame presentation logic, it was a bit of a hack - the final compositing step was done straight in the frontend, for example. The renderer in modern melonDS is a somewhat more refined version of this, but the same basic idea remains.

There is also an issue in this: display capture. The initial solution was to downscale the GPU framebuffer to 256x192 and read that back, so it could be stored in the emulated VRAM, "as normal". Due to going through the emulated VRAM, the captured frame has to be at the original resolution. This is why upscaling in melonDS has those issues.

To work around this, one would need to detect when a VRAM bank is being used as a destination for a display capture, and replace it with a high-resolution version in further frames, in the same way as the 3D layer itself. But obviously, it's more complicated than that. There are several issues. For one, the game could still decide to access a captured frame in VRAM (to read it back or to do custom processing), so that needs to be handled. There are also the several different ways a captured frame can be reused: as a bitmap BG layer (BG2 or BG3), as a bunch of bitmap sprites, or even as a texture in 3D graphics. This is kinda why it has been postponed for so long.

There are even more annoying details, if we consider all the possibilities: while an API like OpenGL gives you an identifier for a texture, and you can only use it within its bounds, the DS isn't like that. When you specify a texture on the 3D engine, you're really just giving it a VRAM address. You could technically point it in the middle of a previously captured frame, or before... Tricky to work with. I made a few demos (like the aforementioned render-to-texture demo) to exercise display capture, but the amount of possibilities makes it tricky.
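To give an idea of the bookkeeping this implies, here is a Python sketch that tracks captured regions as byte ranges and reports which parts of a texture overlap them. The class and method names are hypothetical, and it assumes captures are stored as 16-bit pixels (2 bytes each).

```python
class CaptureTracker:
    """Hypothetical tracker for VRAM ranges that hold captured frames."""

    def __init__(self):
        self.captures = []  # list of (start, end_exclusive) byte ranges

    def add_capture(self, start, width, height):
        # Assumption: captured pixels are 16-bit, so 2 bytes per pixel.
        self.captures.append((start, start + width * height * 2))

    def find_overlaps(self, addr, size):
        """Return the byte ranges of a texture that fall inside a capture."""
        end = addr + size
        return [
            (max(addr, start), min(end, stop))
            for start, stop in self.captures
            if addr < stop and start < end
        ]
```

A texture pointing into the middle of a capture, or starting slightly before it, yields a partial overlap. Those are the cases where simply swapping in "the hi-res version of that texture" stops being straightforward.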

So I'm basically trying to add support for high-resolution display capture.

The first step is to make a separate 2D renderer for OpenGL, which will go with the OpenGL 3D renderers. To remove the GLCompositor wart and the other hacks and integrate the OpenGL rendering functionality more cleanly (and thus, make it easier to implement other renderers in the future, too).

I'm also reworking this compositor to work around the original limitations, and make it easier to splice in high-resolution captured frames. I have a pretty good roadmap as far as the 2D renderer is concerned. For 3D, I'll have to see what I can do...

However, there will be more steps to this. I'm acutely aware of the limitations of the current approach: for example, it doesn't lend itself to applying filters to 2D graphics. I tried in the past, but kept running into issues.

There are several more visual improvements we could add to melonDS - 2D layer/sprite filtering, 3D texture filtering, etc... Thus, the second step of this adventure will be to rework the 2D renderer to do more of the actual rendering work on the GPU. A bit like the hardware renderer I had made for blargSNES a decade ago. This approach would make it easier to apply enhancements to 2D assets or even replace them with better versions entirely, much like user texture packs.

This is not entirely unlike the Deko3D renderer Generic made for the Switch port.

But hey, one step at a time... First, I want to get high-resolution capture working.

There's one detail that Nadia wants to fix before we can release melonDS 1.1. Depending on how long this takes, and how long I take, 1.1 might include my improvements too. If not, that will be for 1.2. We'll see.

Happy birthday melonDS! -- by Arisotura

Those of you who know your melonDS lore know that today is a special day. melonDS is 9 years old!

...hey, I don't control this. 9 is brown. I don't make the rules.

Anyway, yeah. 9 years of melonDS. That's quite the achievement. Sometimes I don't realize it has been so long...

-

As far as I'm concerned, there hasn't been a lot -- 2025 has had a real shitty start for me. A lot of stuff came forward that has been really rough.

On the flip side, it has also been the occasion to get a fresh start. I was told about IFS therapy in March, and I was able to get started. It has been intense, but so far it has been way more effective than previous attempts at therapy.

I'm hopeful for 2026 to be a better year. I'm also going to hopefully get started with a new job which is looking pretty cool, so that's nice too.

-

As far as melonDS is concerned, I have several ideas. First of all, we'll release melonDS 1.1 pretty soon, with a nice bundle of fixes and improvements.

I also have ideas for further releases. RTCom support, netplay, ... there's a lot of potential. I guess we can also look at RetroAchievements, since that seems to be a popular request. There are also the long-standing issues we should finally address, like the lack of upscaling in dual-screen 3D scenes.

Basically, no shortage of things to do. It's just a matter of actually doing them. You know how that goes...

I also plan some upgrades to the site. I have some basic ideas for a homepage redesign, for updating the information that is presented, and presenting it in a nicer way... Some organization for the blog would be nice too, like splitting the posts into categories: release posts, technical shito, ...

I also have something else in mind: adding in a wiki, to host all information related to melonDS. The FAQ, help pages specific to certain topics, maybe compatibility info, ...

And maybe, just maybe, a screenshot section that isn't just a few outdated pics. Maybe that could go on the wiki too...

-

Regardless, happy birthday melonDS! And thank you all, you have helped make this possible!

The joys of programming -- by Arisotura

There have been reports of melonDS 1.0 crashing at random when running multiple instances. This is kind of a problem, especially when local multiplayer is one of melonDS's selling points.

So I went and tried to reproduce it. I could get the 1.0 and 1.0RC builds to crash just by having two instances open, even if they weren't running anything. But I couldn't get my dev build to crash. I thought, great, one of those cursed bugs that don't manifest when you try to debug them. I think our team knows all about this.

Not having too many choices, I took the 1.0 release build and started hacking away at it with a hex editor. Basic idea being, if it crashes with no ROM loaded, the emulator core isn't the culprit, and there aren't that many places to look. So I could nop out function calls until it stopped crashing.

In the end, I ended up rediscovering a bug that I had already fixed.

The SDL joystick API isn't thread-safe. When running multiple emulator instances in 1.0, this API will get called from several different threads, since each instance gets its own emulation thread. I had already addressed this 2.5 months ago, by adding adequate locking.
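To illustrate the kind of locking involved, here is a small Python sketch. It is not the melonDS code (which is C++ and talks to SDL directly): `FakeJoystickAPI` is a made-up stand-in for a library that isn't thread-safe, and each worker thread plays the role of one emulator instance's emulation thread. All threads share one lock, so only one of them is ever inside the API at a time.

```python
import threading

class FakeJoystickAPI:
    """Hypothetical stand-in for a library that isn't thread-safe."""
    def __init__(self):
        self.in_call = False   # True while a call is in progress
        self.overlaps = 0      # times a call started while another was running
        self.calls = 0

    def update(self):
        if self.in_call:
            self.overlaps += 1
        self.in_call = True
        self.calls += 1
        self.in_call = False

def run_instances(num_instances=4, polls=1000):
    """Simulate several emulator instances polling input concurrently."""
    api = FakeJoystickAPI()
    lock = threading.Lock()    # one lock shared by every instance

    def emulation_thread():
        for _ in range(polls):
            with lock:         # serialize every access to the shared API
                api.update()

    threads = [threading.Thread(target=emulation_thread)
               for _ in range(num_instances)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return api.calls, api.overlaps
```

Without the `with lock:` line, nothing prevents two threads from being inside `update()` at once, and whether that actually blows up depends on timing. That is what makes this kind of bug look random.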

I guess at the time of 1.0, it slipped through the cracks due to its random nature, as with many threading-related bugs.

Regardless, if you know your melonDS lore, you know what is coming soon. There will be a new release which will include this fix, among other fun shit. In the meantime, you can use the nightly builds.

Sneak peek -- by Arisotura

Just showing off some of what I've been working on lately:

This is adjacent to something else I want to work on, but it's also been on the popular request list. Having to manually enter cheat codes into melonDS isn't very convenient: it would be much easier to just import them from an existing cheat code database, like the R4 cheat database (also known as usrcheat.dat).

It's also something I've long thought of doing. I had looked at usrcheat.dat and figured the format wasn't very complicated. It was just, you know, actually making myself do it... typical ADHD stuff. But lately I felt like giving it a look. I first wrote a quick PHP parser to make sure I'd gotten the usrcheat.dat format right, then started implementing it into melonDS.

For now, it's in a separate branch, because it's still quite buggy and unfinished, but it's getting there.

The main goal is complete, which is, it parses usrcheat.dat, extracts the relevant entries, and imports them into your game's cheat file. By default, it only shows the game entry which matches your game by checksum, which is largely guaranteed to be the correct one. However, if none is found, it shows all the game entries that match by game code.
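The matching rule described above can be sketched roughly like this in Python. The `GameEntry` fields and the `select_entries` name are invented for the example, and this says nothing about the actual usrcheat.dat layout or the melonDS code. It only shows the "checksum first, game code as fallback" logic.

```python
from dataclasses import dataclass

@dataclass
class GameEntry:
    # Hypothetical, simplified view of one game entry in the database
    game_code: str
    checksum: int
    name: str

def select_entries(entries, game_code, checksum):
    """Return the entries to show for the currently loaded game."""
    # Preferred: entries whose checksum matches the loaded game
    exact = [e for e in entries if e.checksum == checksum]
    if exact:
        return exact
    # Fallback: every entry that shares the game code
    return [e for e in entries if e.game_code == game_code]

# Example data (made up)
db = [
    GameEntry("ABCE", 0x1111, "Some Game (rev 0)"),
    GameEntry("ABCE", 0x2222, "Some Game (rev 1)"),
    GameEntry("WXYZ", 0x3333, "Other Game"),
]
```

With this data, a game with code `ABCE` and checksum `0x2222` gets a single entry, while the same game code with an unknown checksum gets both `ABCE` entries to choose from.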

It also shows a bunch of information about the codes and lets you choose which ones you want to import. By default, all of them will be imported.

This part is mostly done, besides some minor UI/layout bugs.

The rest is adding new functionality to the existing cheat code editor. I modified the melonDS cheat file format (the .mch files) to add support for the extra information usrcheat.dat provides, all while keeping it backwards compatible, so older .mch files will still load just fine. But, of course, I also need to expose that in the UI somehow.

I also found a bug: if you delete a cheat code, the next one in the list gets its code list erased, due to the way the UI works. Not great.

I'm thinking of changing the way this works: selecting a cheat code would show the information in disabled/read-only fields, you'd click an "Edit" button to modify those fields, then you'd get "Save" or "Cancel" buttons... This would avoid many of the oddities of the current interface.

The rest is a bunch of code cleanup...

Stay tuned!

Not much to say lately... -- by Arisotura

Yeah...

I guess real life matters don't really help. At least, my mental health is genuinely improving, so there's that.

But as far as my projects are concerned, this seems to be one of those times where I just don't feel like coding. Well, not quite, I have been committing some stuff to melonDS... just not anything that would be worthy of a juicy blog post. I also had another project: porting my old SNES emulator to the WiiU gamepad. I got it to a point where it runs and displays some graphics, but for now I don't seem motivated to continue working on it...

But I still have some ideas for melonDS.

One idea I had a while ago: using the WFC connection presets to store settings for different altWFC servers. For example, using connection 1 for Wiimmfi, connection 2 for Kaeru, etc... and melonDS would provide some way of switching between them. I don't know how really useful it would be, or how feasible it would be wrt patching requirements, but it could be interesting.

Another idea would be RTCom support. Basically RTCom is a protocol that is used on the 3DS in DS mode to add support for analog sticks and such. It involves game patches and ARM11-side patches to forward input data to RTC registers. The annoying aspect of this is that each game seems to have its own ARM11-side patch, and I don't really look forward to implementing a whole ARM11 interpreter just for this.

But maybe input enhancements, be it RTCom stuff or niche GBA slot addons, would make a good use case for Lua scripting, or some kind of plugin system... I don't know.

There are other big ideas, of course. The planned UI redesign, the netplay stuff, website changes, ...

Oh well.

Fix for macOS builds -- by Arisotura

Not a lot to talk about these days, as far as melonDS is concerned. I have some ideas, some big, some less big, but I'm also being my usual ADHD self.

Anyway, there have been complaints about the macOS builds we distribute: they're distributed as nested zip files.

Apparently, those people aren't great fans of Russian dolls...

The issue is caused by the way our macOS CI works. I'd have to ask Nadia, but I think there isn't really an easy fix for this. So the builds available on Github will have to stay nested.

However, since I control this site's server, I can fix the issue here. So now, all the macOS builds on the melonDS site have been fixed, they're no longer nested. Similarly, new builds should also be fixed as they're uploaded. Let us know if anything goes wrong with this.

DSP HLE: reaching the finish line -- by Arisotura

In the last post, the last things that needed to be added to DSP HLE were the G711 ucode, and the basic audio functions common to all ucodes.

G711 was rather trivial to add. The rest was... more involved. Reason was that adding the new audio features required reworking some of melonDS's audio support.

melonDS was originally built around the DS hardware. As far as sound is concerned, the DS has a simple 16-channel mixer, and the output from said mixer is degraded to 10-bit and PWM'd to the speakers. Microphone input is even simpler: the mic is connected to the touchscreen controller's AUX input. Reading said input gives you the current mic level, and you need to set up a timer to manually sample it at the frequency you want.

So obviously, in the early days, melonDS was built around that design.

The DSi, however, provides a less archaic system for producing and acquiring sound.

Namely, it adds a TI audio amplifier, which is similar to the one found in the Wii U gamepad, for example. This audio amplifier also doubles as a touchscreen controller, for DS backwards compatibility.

Instead of the old PWM stuff, output from the DS mixer is sent to the amplifier over an I2S interface. There is some extra hardware to support that interface. It is possible to set the sampling frequency to 32 kHz (like the DS) or 47 kHz. There is also a ratio for mixing outputs from the DS mixer and the DSP.

Microphone input also goes through an I2S interface. The DSi provides hardware to automatically sample mic input at a preset frequency, and it's even possible to automate it entirely with an NDMA transfer. All in all, quite the upgrade compared to the DS. Oh, and mic input is also routed to the DSP, and the ucodes have basic functions for mic sampling too.

All fine and dandy...

So I based my work on CasualPokePlayer's PR, which implemented some of the new DSi audio functionality. I added support for mixing in DSP audio. The "play sound" command in itself was trivial to implement in HLE, once there was something in place to actually output DSP audio.

I added support for the different I2S sampling frequencies. The 47 kHz setting doesn't affect DS mixer output beyond improving quality somewhat, but it affects the rate at which DSP output plays.

For this, I changed melonDS to always produce audio at 47 kHz (instead of 32 kHz). In 32 kHz mode (or in DS mode), audio output will be resampled to 47 kHz. It was the easiest way to deal with this sort of feature.
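For readers unfamiliar with resampling, here is a minimal Python sketch of the idea. It uses plain linear interpolation, which is an assumption made for the example and not necessarily what melonDS does. The rates are passed in as round numbers (32000 and 47000) for illustration, and the `resample_linear` name is made up.

```python
def resample_linear(samples, src_rate, dst_rate):
    """Resample a mono sample list from src_rate to dst_rate (linear interpolation)."""
    if not samples:
        return []
    out_len = len(samples) * dst_rate // src_rate
    out = []
    for i in range(out_len):
        # Position of output sample i, expressed in input samples
        pos = i * src_rate / dst_rate
        idx = int(pos)
        frac = pos - idx
        a = samples[idx]
        # Clamp at the end so the last input sample is simply held
        b = samples[min(idx + 1, len(samples) - 1)]
        out.append(a + (b - a) * frac)
    return out

# 32 input samples at 32 kHz become 47 output samples at 47 kHz
upsampled = resample_linear([5] * 32, 32000, 47000)
```

Producing everything at the higher rate means the rest of the audio pipeline only ever has to deal with one output rate, whichever mode the emulated hardware is in.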

Then, I added support for audio output to Teakra. I had to record the audio output to a file to check whether it was working correctly, because it's just too slow with DSP LLE, but a couple bugfixes later, it was working.

Then came the turn of microphone input. The way it's done on the DSP is a bit weird. Given the "play sound" command will DMA sound data from ARM9 memory, I thought the mic commands would work in a similar way, but no. Instead, they just continually write mic input to a circular buffer in DSP memory, and that's about it. Then you need to set up a timer to periodically read that circular buffer with PDATA.
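The producer/consumer pattern described here looks roughly like this. This is a generic ring buffer in Python, not a model of DSP memory or of PDATA reads: the `MicRingBuffer` class and its method names are invented for the example.

```python
class MicRingBuffer:
    """Generic circular buffer: one side keeps writing, the other polls."""
    def __init__(self, size):
        self.data = [0] * size
        self.size = size
        self.write_pos = 0  # advanced by the producer (the mic sampling side)
        self.read_pos = 0   # advanced by the consumer (the timer-driven reader)

    def write(self, sample):
        # The producer never waits: it overwrites old data once it wraps around
        self.data[self.write_pos] = sample
        self.write_pos = (self.write_pos + 1) % self.size

    def read_new(self):
        # Called periodically: collect everything written since the last call
        out = []
        while self.read_pos != self.write_pos:
            out.append(self.data[self.read_pos])
            self.read_pos = (self.read_pos + 1) % self.size
        return out

buf = MicRingBuffer(8)
for s in [1, 2, 3]:
    buf.write(s)
first = buf.read_new()    # the three samples written so far
for s in [4, 5, 6, 7, 8, 9]:
    buf.write(s)          # these writes wrap around the end of the buffer
second = buf.read_new()
```

The catch with this scheme is that the reader has to come around often enough: if the writer gets a full buffer ahead, the older samples are overwritten and lost.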

But there was more work to be done around mic input.

I implemented a centralized hub for mic functionality, so buffers and logic wouldn't be duplicated in several places in melonDS. I also changed the way mic input data is fed into melonDS. With the hub in place, I could also add logic for starting and stopping mic recording. This way, when using an external microphone, it's possible to only request the mic when the game needs it, instead of hogging it all the time.
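As a rough idea of what "only request the mic when the game needs it" can look like, here is a Python sketch. Everything in it is hypothetical: the `MicHub` class, the user counting, and the `open_device`/`close_device` callbacks are assumptions for the example, not the melonDS design.

```python
class MicHub:
    """Hypothetical central point that owns the host microphone."""
    def __init__(self, open_device, close_device):
        self._open = open_device     # called when recording must begin
        self._close = close_device   # called when nobody needs the mic anymore
        self._users = 0

    def start(self):
        # Only the first user actually opens the host device
        if self._users == 0:
            self._open()
        self._users += 1

    def stop(self):
        if self._users == 0:
            return                   # unbalanced stop: ignore it
        self._users -= 1
        # Only the last user actually releases the host device
        if self._users == 0:
            self._close()

events = []
hub = MicHub(lambda: events.append("open"), lambda: events.append("close"))
hub.start()   # e.g. the emulated mic interface gets enabled
hub.start()   # e.g. a second consumer starts sampling
hub.stop()
hub.stop()    # last user gone: the host mic is released
```

Routing every consumer through one object like this is also what keeps buffers and logic from being duplicated across the emulator.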

Besides that, it wasn't very hard to make this new mic input system work, including in DSi mode. I made some changes to CasualPokePlayer's code to make it more accurate to hardware. I also added the mic sampling commands to DSP HLE, and added BTDMP input support to Teakra so it can also receive mic input.

The tricky part was getting input from an external mic to play nicely and smoothly, but I got there.

There is only one problem left: libnds homebrew in DSi mode will have noisy mic input. This is a timing issue: libnds uses a really oddball way to sample the DSi mic (in order to keep it compatible with the old DS APIs), where it will repeatedly disable, flush and re-enable the mic interface, and wait to receive one sample. The wait is a polling loop with a timeout counter, but it's running too fast on melonDS, so sometimes it doesn't get sampled properly.

So, this is mostly it. What's left is a bunch of cleanup, misc testing, and adding the new state to savestates.

DSP HLE is done. It should allow most DSP titles to play at decent speeds. However, if a game uses an unrecognized DSP ucode, it will fall back to LLE.
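The fallback logic boils down to something like the following Python sketch. How melonDS actually identifies a ucode isn't covered here, so hashing the binary is purely an assumption, and `register_ucode`, `pick_dsp_backend` and the byte strings are made up for the example.

```python
import hashlib

# Hypothetical table: hash of a ucode binary -> name of the HLE implementation
KNOWN_UCODES = {}

def register_ucode(ucode_bytes, name):
    KNOWN_UCODES[hashlib.sha256(ucode_bytes).hexdigest()] = name

def pick_dsp_backend(ucode_bytes):
    """Use HLE when the ucode is recognized, otherwise fall back to LLE."""
    name = KNOWN_UCODES.get(hashlib.sha256(ucode_bytes).hexdigest())
    if name is not None:
        return ("HLE", name)
    return ("LLE", None)

# Placeholder binaries standing in for real ucode images
register_ucode(b"placeholder AAC ucode", "AAC SDK")
register_ucode(b"placeholder G711 ucode", "G711 SDK")
```

The nice property of this arrangement is that an unknown ucode still runs, just slowly, instead of not running at all.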

The dsp_hle branch also went beyond the original scope of DSP HLE, but it's all good.

DSi mode finally gets microphone input. I believe this was the last big missing feature, so this brings DSi mode on par with DS mode.

The last big thing that might need to be added would be a DSP JIT, if there's demand for this. I might look into it at some point, as it would be an occasion to learn new stuff.

I'm thinking those changes might warrant a lil' release.

Having fun with DSP HLE -- by Arisotura

If you pay attention to the melonDS repo, you might have noticed the new branch: dsp_hle.

And this branch is starting to see some results...

These screenshots might not look too impressive at first glance, but pay closer attention to the framerates. Those would be more like 4 FPS with DSP LLE.

Also, we still haven't fixed the timing issue which affects the DSi sound app -- I had to hack the cache timings to get it to run, and I haven't committed that. We'll fix this in a better way, I promise.

So, how does this all work?

CasualPokePlayer has done research on the different DSP ucodes in existence. So far, three main classes have been identified:

• AAC SDK: provides an AAC decoder. The DSi sound app uses an early version of this ucode. It is also found in a couple other titles.

• Graphics SDK: provides functions for scaling bitmaps and converting YUV pictures to 15-bit RGB.